If some of the many thousands of human volunteers needed to test coronavirus vaccines could have been replaced by digital replicas—one of this year's Top 10 Emerging Technologies—COVID-19 vaccines might have been developed even faster, saving untold lives. Soon virtual clinical trials could be a reality for testing new vaccines and therapies. Other technologies on the list could reduce greenhouse gas emissions by electrifying air travel and enabling sunlight to directly power the production of industrial chemicals. With “spatial” computing, the digital and physical worlds will be integrated in ways that go beyond the feats of virtual reality. And ultrasensitive sensors that exploit quantum processes will set the stage for such applications as wearable brain scanners and vehicles that can see around corners.

These and the other emerging technologies have been singled out by an international steering group of experts. The group, convened by Scientific American and the World Economic Forum, sifted through more than 75 nominations. To win the nod, the technologies must have the potential to spur progress in societies and economies by outperforming established ways of doing things. They also need to be novel (that is, not currently in wide use) yet likely to have a major impact within the next three to five years. The steering group met (virtually) to whittle down the candidates and then closely evaluate the front-runners before making the final decisions. We hope you are as inspired by the reports that follow as we are.

MEDICINE: Microneedles for Painless Injections and Tests

Fewer trips to medical labs make care more accessible

By Elizabeth O'Day

Barely visible needles, or “microneedles,” are poised to usher in an era of pain-free injections and blood testing. Whether attached to a syringe or a patch, microneedles prevent pain by avoiding contact with nerve endings. Typically 50 to 2,000 microns in length (about the depth of a sheet of paper) and one to 100 microns wide (about the width of human hair), they penetrate the dead, top layer of skin to reach into the second layer—the epidermis—consisting of viable cells and a liquid known as interstitial fluid. But most do not reach or only barely touch the underlying dermis, where the nerve endings lie, along with blood and lymph vessels and connective tissue.

Many microneedle syringe and patch applications are already available for administering vaccines, and many more are in clinical trials for use in treating diabetes, cancer and neuropathic pain. Because these devices insert drugs directly into the epidermis or dermis, they deliver medicines much more efficiently than familiar transdermal patches, which rely on diffusion through the skin. This year researchers debuted a novel technique for treating skin disorders such as psoriasis, warts and certain types of cancer: mixing star-shaped microneedles into a therapeutic cream or gel. The needles' temporary, gentle perforation of the skin enhances passage of the therapeutic agent.

Many microneedle products are moving toward commercialization for rapid, painless draws of blood or interstitial fluid and for use in diagnostic testing or health monitoring. Tiny holes made by the needles induce a local change in pressure in the epidermis or dermis that forces interstitial fluid or blood into a collection device. If the needles are coupled to biosensors, the devices can, within minutes, directly measure biological markers indicative of health or disease status, such as glucose, cholesterol, alcohol, drug by-products or immune cells.

Some products would allow the draws to be done at home and mailed to a lab or analyzed on the spot. At least one product has already cleared regulatory hurdles for such use: the U.S. and Europe recently approved the TAP blood collection device from Seventh Sense Biosystems, which enables laypeople to collect a small sample of blood on their own, whether for sending to a lab or for self-monitoring. In research settings, microneedles are also being integrated with wireless communication devices to measure a biological molecule, use the measurement to determine a proper drug dose, and then deliver that dose—an approach that could help realize the promise of personalized medicine.

Microneedle devices could enable testing and treatment to be delivered in underserved areas because they do not require costly equipment or a lot of training to administer. Micron Biomedical has developed one such easy-to-use device: a bandage-sized patch that anyone can apply. Another company called Vaxxas is developing a microneedle vaccine patch that in animal and early human testing elicited enhanced immune responses using a mere fraction of the usual dose. Microneedles can also reduce the risk of transmitting blood-borne viruses and decrease hazardous waste from the disposal of conventional needles.

Tiny needles are not always an advantage; they will not suffice when large doses are needed. Not all drugs can pass through microneedles, nor can all biomarkers be sampled through them. More research is needed to understand how factors such as the age and weight of the patient, the site of injection and the delivery technique influence the effectiveness of microneedle-based technologies. Still, these painless prickers can be expected to significantly expand drug delivery and diagnostics, and new uses will arise as investigators devise ways to use them in organs beyond the skin.

CHEMICAL ENGINEERING: Sun-Powered Chemistry

Visible light can drive processes that convert carbon dioxide into common materials

By Javier Garcia Martinez

The manufacture of many chemicals important to human health and comfort consumes fossil fuels, thereby contributing to extractive processes, carbon dioxide emissions and climate change. A new approach employs sunlight to convert waste carbon dioxide into these needed chemicals, potentially reducing emissions in two ways: by using the unwanted gas as a raw material and sunlight, not fossil fuels, as the source of energy needed for production.

This process is becoming increasingly feasible thanks to advances in sunlight-activated catalysts, or photocatalysts. In recent years investigators have developed photocatalysts that break the resistant double bond between carbon and oxygen in carbon dioxide. This is a critical first step in creating “solar” refineries that produce useful compounds from the waste gas—including “platform” molecules that can serve as raw materials for the synthesis of such varied products as medicines, detergents, fertilizers and textiles.

Photocatalysts are typically semiconductors, which require high-energy ultraviolet light to generate the electrons involved in the transformation of carbon dioxide. Yet ultraviolet light is both scarce (representing just 5 percent of sunlight) and harmful. The development of new catalysts that work under more abundant and benign visible light has therefore been a major objective. That demand is being addressed by careful engineering of the composition, structure and morphology of existing catalysts, such as titanium dioxide. Although it efficiently converts carbon dioxide into other molecules solely in response to ultraviolet light, doping it with nitrogen greatly lowers the energy required to do so. The altered catalyst now needs only visible light to yield widely used chemicals such as methanol, formaldehyde and formic acid—collectively important in the manufacture of adhesives, foams, plywood, cabinetry, flooring and disinfectants.

At the moment, solar chemical research is occurring mainly in academic laboratories, including at the Joint Center for Artificial Photosynthesis, run by the California Institute of Technology in partnership with Lawrence Berkeley National Laboratory; a Netherlands-based collaboration of universities, industry and research and technology organizations called the Sunrise consortium; and the department of heterogeneous reactions at the Max Planck Institute for Chemical Energy Conversion in Mülheim, Germany. Some start-ups are working on a different approach to transforming carbon dioxide into useful substances—namely, applying electricity to drive the chemical reactions. Using electricity to power the reactions would obviously be less environmentally friendly than using sunlight if the electricity were derived from fossil-fuel combustion, but reliance on photovoltaics could overcome that drawback.

The advances occurring in the sunlight-driven conversion of carbon dioxide into chemicals are sure to be commercialized and further developed by start-ups or other companies in the coming years. Then the chemical industry—by transforming what today is waste carbon dioxide into valuable products—will move a step closer to becoming part of a true, waste-free, circular economy, as well as helping to make the goal of generating negative emissions a reality.

HEALTH CARE: Virtual Patients

Replacing humans with simulations could make clinical trials faster and safer

By Daniel E. Hurtado and Sophia M. Velastegui

Every day, it seems, some new algorithm enables computers to diagnose a disease with unprecedented accuracy, renewing predictions that computers will soon replace doctors. What if computers could replace patients as well? If virtual humans could have replaced real people in some stages of a coronavirus vaccine trial, for instance, it could have sped development of a preventive tool and slowed down the pandemic. Similarly, potential vaccines that weren't likely to work could have been identified early, slashing trial costs and avoiding testing poor vaccine candidates on living volunteers. These are some of the benefits of “in silico medicine,” or the testing of drugs and treatments on virtual organs or body systems to predict how a real person will respond to the therapies. For the foreseeable future, real patients will be needed in late-stage studies, but in silico trials will make it possible to conduct quick and inexpensive first assessments of safety and efficacy, drastically reducing the number of live human subjects required for experimentation.

With virtual organs, the modeling begins by feeding anatomical data drawn from noninvasive high-resolution imaging of an individual's actual organ into a complex mathematical model of the mechanisms that govern that organ's function. Algorithms running on powerful computers resolve the resulting equations and unknowns, generating a virtual organ that looks and behaves like the real thing.

In silico clinical trials are already underway to an extent. The U.S. Food and Drug Administration, for instance, is using computer simulations in place of human trials for evaluating new mammography systems. The agency has also published guidance for designing trials of drugs and devices that include virtual patients.

Beyond speeding results and mitigating the risks of clinical trials, in silico medicine can be used in place of risky interventions that are required for diagnosing or planning treatment of certain medical conditions. For example, HeartFlow Analysis, a cloud-based service approved by the FDA, enables clinicians to identify coronary artery disease based on CT images of a patient's heart. The HeartFlow system uses these images to construct a fluid dynamic model of the blood running through the coronary blood vessels, thereby identifying abnormal conditions and their severity. Without this technology, doctors would need to perform an invasive angiogram to decide whether and how to intervene. Experimenting on digital models of individual patients can also help personalize therapy for any number of conditions and is already used in diabetes care.

The philosophy behind in silico medicine is not new. The ability to create an object and simulate its performance under hundreds of operating conditions has been a cornerstone of engineering for decades, for example, in designing electronic circuits, airplanes and buildings. Even so, various hurdles stand in the way of the widespread implementation of in silico medicine in research and treatment.

First, the predictive power and reliability of this technology must be confirmed, and that will require several advances. Those include the generation of high-quality medical databases from a large, ethnically diverse patient base that has women as well as men; refinement of mathematical models to account for the many interacting processes in the body; and further modification of artificial-intelligence methods that were developed primarily for computer-based speech and image recognition and need to be extended to provide biological insights. The scientific community and industry partners are addressing these issues through initiatives such as the Living Heart Project by Dassault Systèmes, the Virtual Physiological Human Institute for Integrative Biomedical Research and Microsoft's Healthcare NExT.

In recent years the FDA and European regulators have approved some commercial uses of computer-based diagnostics, but meeting regulatory demands requires considerable time and money. Creating demand for these tools is challenging given the complexity of the health care ecosystem. In silico medicine must be able to deliver cost-effective value for patients, clinicians and health care organizations to accelerate their adoption of the technology.

COMPUTING: Spatial Computing

The next big thing beyond virtual and augmented reality

By Corinna E. Lathan and Geoffrey Ling

Imagine Martha, an octogenarian who lives independently and uses a wheelchair. All objects in her home are digitally catalogued; all sensors and the devices that control objects have been Internet-enabled; and a digital map of her home has been merged with the object map. As Martha moves from her bedroom to the kitchen, the lights switch on, and the ambient temperature adjusts. The chair will slow if her cat crosses her path. When she reaches the kitchen, the table moves to improve her access to the refrigerator and stove, then moves back when she is ready to eat. Later, if she begins to fall when getting into bed, her furniture shifts to protect her, and an alert goes to her son and the local monitoring station.

The “spatial computing” at the heart of this scene is the next step in the ongoing convergence of the physical and digital worlds. It does everything virtual-reality and augmented-reality apps do: digitize objects that connect via the cloud; allow sensors and motors to react to one another; and digitally represent the real world. Then it combines these capabilities with high-fidelity spatial mapping to enable a computer “coordinator” to track and control the movements and interactions of objects as a person navigates through the digital or physical world. Spatial computing will soon bring human-machine and machine-machine interactions to new levels of efficiency in many walks of life, among them industry, health care, transportation and the home. Major companies, including Microsoft and Amazon, are heavily invested in the technology.

As is true of virtual and augmented reality, spatial computing builds on the “digital twin” concept familiar from computer-aided design (CAD). In CAD, engineers create a digital representation of an object. This twin can be used variously to 3-D-print the object, design new versions of it, provide virtual training on it or join it with other digital objects to create virtual worlds. Spatial computing makes digital twins not just of objects but of people and locations—using GPS, lidar (light detection and ranging), video and other geolocation technologies to create a digital map of a room, a building or a city. Software algorithms integrate this digital map with sensor data and digital representations of objects and people to create a digital world that can be observed, quantified and manipulated and that can also manipulate the real world.

In the medical realm, consider this futuristic scenario: A paramedic team is dispatched to an apartment in a city to handle a patient who might need emergency surgery. As the system sends the patient's medical records and real-time updates to the technicians' mobile devices and to the emergency department, it also determines the fastest driving route to reach the person. Red lights hold crossing traffic, and as the ambulance pulls up, the building's entry doors open, revealing an elevator already in position. Objects move out of the way as the medics hurry in with their stretcher. As the system guides them to the ER via the quickest route, a surgical team uses spatial computing and augmented reality to map out the choreography of the entire operating room or plan a surgical path through this patient's body.

Industry has already embraced the integration of dedicated sensors, digital twins and the Internet of Things to optimize productivity and will likely be an early adopter of spatial computing. The technology can add location-based tracking to a piece of equipment or an entire factory. By donning augmented-reality headsets or viewing a projected holographic image that displays not only repair instructions but also a spatial map of the machine components, workers can be guided through and around the machine to fix it as efficiently as possible, shrinking downtime and its costs. Or if a technician were engaging with a virtual-reality version of a true remote site to direct several robots as they built a factory, spatial-computing algorithms could help optimize the safety, efficiency and quality of the work by improving, for example, the coordination of the robots and the selection of tasks assigned to them. In a more common scenario, fast-food and retail companies could combine spatial computing with standard industrial engineering techniques (such as time-motion analyses) to enhance the efficient flow of work.

MEDICINE: Digital Medicine

Apps that diagnose and even treat what ails us

By P. Murali Doraiswamy

Could the next prescription from your doctor be for an app? A raft of apps in use or under development can now detect or monitor mental and physical disorders autonomously or directly administer therapies. Collectively known as digital medicines, the software can both enhance traditional medical care and support patients when access to health care is limited—a need that the COVID-19 crisis has exacerbated.

Many detection aids rely on mobile devices to record such features as users' voices, locations, facial expressions, exercise, sleep and texting activity; then they apply artificial intelligence to flag the possible onset or exacerbation of a condition. Some smart watches, for instance, contain a sensor that automatically detects and alerts people to atrial fibrillation, a dangerous heart rhythm. Similar tools are in the works to screen for breathing disorders, depression, Parkinson's, Alzheimer's, autism and other conditions. These detection, or “digital phenotyping,” aids will not replace a doctor any time soon but can be helpful partners in highlighting concerns that need follow-up. Detection aids can also take the form of ingestible, sensor-bearing pills, called microbioelectronic devices. Some are being developed to detect things such as cancerous DNA, gases emitted by gut microbes, stomach bleeds, body temperature and oxygen levels. The sensors relay the data to apps for recording.

The therapeutic apps are likewise designed for a variety of disorders. The first prescription digital therapeutic to gain FDA approval was Pear Therapeutics's reSET technology for substance use disorder. Okayed in 2018 as an adjunct to care from a health professional, reSET provides 24/7 cognitive-behavioral therapy (CBT) and gives clinicians real-time data on their patients' cravings and triggers. Somryst, an insomnia therapy app, and EndeavorRx, the first therapy delivered as a video game for children with attention deficit hyperactivity disorder, received FDA clearance earlier this year.

Looking ahead, Luminopia, a children's health start-up, has designed a virtual-reality app to treat amblyopia (lazy eye) as an alternative to an eye patch. One day college students might receive alerts from a smart watch suggesting they seek help for mild depression after the watch detects changes in speech and socializing patterns; then they might turn to the Woebot chat bot for CBT counseling.

Not all wellness apps qualify as digital medicines. For the most part, those intended to diagnose or treat disorders must be proved safe and effective in clinical trials and earn regulatory approval; some may need a doctor's prescription. (In April, to help with the COVID-19 pandemic, the FDA made temporary exceptions for low-risk mental health devices.)

COVID-19 highlighted the importance of digital medicine. As the outbreak unfolded, dozens of apps for detecting depression and providing counseling became available. Additionally, hospitals and government agencies across the globe deployed variations of Microsoft's Healthcare Bot service. Instead of waiting on hold with a call center or risking a trip to the emergency room, people concerned about experiencing, say, coughing and fever could chat with a bot, which used natural-language processing to ask about symptoms and, based on AI analyses, could describe possible causes or begin a telemedicine session for assessment by a physician. By late April the bots had already fielded more than 200 million inquiries about COVID symptoms and treatments. Such interventions greatly reduced the strain on health systems.

Clearly, society must move into the future of digital medicine with care—ensuring that the tools undergo rigorous testing, protect privacy and integrate smoothly into doctors' workflows. With such protections in place, digital phenotyping and therapeutics could save health care costs by flagging unhealthy behaviors and helping people to make changes before diseases set in. Moreover, applying AI to the big data sets that will be generated by digital phenotyping and therapeutic apps should help to personalize patient care. The patterns that emerge will also provide researchers with novel ideas for how best to build healthier habits and prevent disease.

TRANSPORTATION: Electric Aviation

Enabling air travel to decarbonize

By Katherine Hamilton and Tammy Ma

In 2019 air travel accounted for 2.5 percent of global carbon emissions, a number that could triple by 2050. While some airlines have started offsetting their contributions to atmospheric carbon, significant cutbacks are still needed. Electric airplanes could provide the scale of transformation required, and many companies are racing to develop them. Not only would electric propulsion motors eliminate direct carbon emissions, they could reduce fuel costs by up to 90 percent, maintenance by up to 50 percent and noise by nearly 70 percent.

Among the companies working on electric flight are Airbus, Ampaire, MagniX and Eviation. All are flight-testing aircraft meant for private, corporate or commuter trips and are seeking certification from the U.S. Federal Aviation Administration. Cape Air, one of the largest regional airlines, expects to be among the first customers, with plans to buy the Alice nine-passenger electric aircraft from Eviation. Cape Air's CEO Dan Wolf has said he is interested not only in the environmental benefits but also in potential savings on operation costs. Electric motors generally have longer life spans than the hydrocarbon-fueled engines in his current aircraft; they need an overhaul at 20,000 hours versus 2,000.

Forward-propulsion engines are not the only ones going electric. NASA's X-57 Maxwell electric plane, under development, replaces conventional wings with shorter ones that feature a set of distributed electric propellers. On conventional jets, wings must be large enough to provide lift when a craft is traveling at a low speed, but the large surface area adds drag at higher speeds. Electric propellers increase lift during takeoff, allowing for smaller wings and overall higher efficiency.

For the foreseeable future, electric planes will be limited in how far they can travel. Today's best batteries store far less energy by weight than traditional fuels: an energy density of 250 watt-hours per kilogram versus 12,000 watt-hours per kilogram for jet fuel. The batteries required for a given flight are therefore far heavier than standard fuel and take up more space. Even so, approximately half of all flights globally are shorter than 800 kilometers, a distance expected to be within the range of battery-powered electric aircraft by 2025.
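The weight penalty implied by those two energy densities can be worked out directly. The short Python sketch below is only an illustration: the two figures come from the paragraph above, while the function names and the one-megawatt-hour energy budget are hypothetical choices made for the example. It compares stored energy only and ignores real-world factors, such as the higher efficiency of electric motors and the fact that fuel weight drops as it burns.

```python
# Energy densities quoted in the article, in watt-hours per kilogram.
BATTERY_WH_PER_KG = 250
JET_FUEL_WH_PER_KG = 12_000


def mass_for_energy(energy_wh: float, wh_per_kg: float) -> float:
    """Return the mass in kilograms needed to store energy_wh."""
    if wh_per_kg <= 0:
        raise ValueError("energy density must be positive")
    return energy_wh / wh_per_kg


def battery_to_fuel_mass_ratio() -> float:
    """How many times heavier batteries are than jet fuel for equal stored energy."""
    return JET_FUEL_WH_PER_KG / BATTERY_WH_PER_KG


if __name__ == "__main__":
    # Hypothetical energy budget, chosen only for illustration.
    energy_wh = 1_000_000  # one megawatt-hour
    print("battery mass (kg):", mass_for_energy(energy_wh, BATTERY_WH_PER_KG))
    print("jet fuel mass (kg):", mass_for_energy(energy_wh, JET_FUEL_WH_PER_KG))
    print("mass ratio:", battery_to_fuel_mass_ratio())  # 48.0
```

On these figures alone, batteries weigh 48 times as much as jet fuel holding the same energy, which is why range is the binding constraint for electric aircraft.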

Electric aviation faces cost and regulatory hurdles, but investors, incubators, corporations and governments excited by the progress of this technology are investing significantly in its development: some $250 million flowed to electric aviation start-ups between 2017 and 2019. Currently roughly 170 electric airplane projects are underway. Most electric airplanes are designed for private, corporate and commuter travel, but Airbus says it plans to have 100-passenger versions ready to fly by 2030.

INFRASTRUCTURE: Lower-Carbon Cement

Construction material that combats climate change

By Mariette DiChristina

Concrete, the most widely used human-made material, shapes much of our built world. The manufacture of one of its key components, cement, creates a substantial yet underappreciated amount of human-produced carbon dioxide: up to 8 percent of the global total, according to London-based think tank Chatham House. It has been said that if cement production were a country, it would be the third-largest emitter after China and the U.S. Currently four billion tons of cement are produced every year, but because of increasing urbanization, that figure is expected to rise to five billion tons in the next 30 years, Chatham House reports. The emissions from cement production result from the fossil fuels used to generate heat for cement formation, as well as from the chemical process in a kiln that transforms limestone into clinker, which is then ground and combined with other materials to make cement.

Although the construction industry is typically resistant to change for a variety of reasons—safety and reliability among them—the pressure to decrease its contributions to climate change may well accelerate disruption. In 2018 the Global Cement and Concrete Association, which represents about 30 percent of worldwide production, announced the industry's first Sustainability Guidelines, a set of key measurements such as emissions and water usage intended to track performance improvements and make them transparent.

Meanwhile a variety of lower-carbon approaches are being pursued, with some already in practice. Start-up Solidia in Piscataway, N.J., is employing a chemical process licensed from Rutgers University that has cut 30 percent of the carbon dioxide usually released in making cement. The recipe uses more clay, less limestone and less heat than typical processes. CarbonCure in Dartmouth, Nova Scotia, stores carbon dioxide captured from other industrial processes in concrete through mineralization rather than releasing it into the atmosphere as a by-product. Montreal-based CarbiCrete ditches the cement in concrete altogether, replacing it with a by-product of steelmaking called steel slag. And Norcem, a major producer of cement in Norway, is aiming to turn one of its factories into the world's first zero-emissions cement-making plant. The facility already uses alternative fuels from wastes and intends to add carbon capture and storage technologies to remove emissions entirely by 2030.

Additionally, researchers have been incorporating bacteria into concrete formulations to absorb carbon dioxide from the air and to improve its properties. Start-ups pursuing “living” building materials include BioMason in Raleigh, N.C., which “grows” cementlike bricks using bacteria and particles called aggregate. And in an innovation funded by DARPA and published in February in the journal Matter, researchers at the University of Colorado Boulder employed photosynthetic microbes called cyanobacteria to build a lower-carbon concrete. They inoculated a sand-hydrogel scaffold with bacteria to create bricks with an ability to self-heal cracks.

These bricks could not replace cement and concrete in all of today's applications. They could, however, someday take the place of light-duty load-bearing materials, such as those used for pavers, facades and temporary structures.

COMPUTING: Quantum Sensing

High-precision metrology based on the peculiarities of the subatomic realm

By Carlo Ratti

Quantum computers get all the hype, but quantum sensors could be equally transformative, enabling autonomous vehicles that can “see” around corners, underwater navigation systems, early-warning systems for volcanic activity and earthquakes, and portable scanners that monitor a person's brain activity during daily life.

Quantum sensors reach extreme levels of precision by exploiting the quantum nature of matter—using the difference between, for example, electrons in different energy states as a base unit. Atomic clocks illustrate this principle. The world time standard is based on the fact that electrons in cesium 133 atoms complete a specific transition 9,192,631,770 times a second; this is the oscillation that other clocks are tuned against. Other quantum sensors use atomic transitions to detect minuscule changes in motion and tiny differences in gravitational, electric and magnetic fields.
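To make the atomic-clock example concrete, the Python sketch below treats timekeeping as counting cycles of the cesium transition. It is a simplified illustration, not a description of how a real clock is engineered: the transition frequency is the figure given above, and the function names are hypothetical.

```python
from fractions import Fraction

# Cesium 133 transition frequency from the article, in cycles per second.
CESIUM_CYCLES_PER_SECOND = 9_192_631_770


def elapsed_seconds(cycle_count: int) -> Fraction:
    """Convert a count of cesium cycles into elapsed time, as an exact fraction of a second."""
    if cycle_count < 0:
        raise ValueError("cycle count cannot be negative")
    return Fraction(cycle_count, CESIUM_CYCLES_PER_SECOND)


def cycle_period_seconds() -> float:
    """Duration of a single cycle, which sets the finest step the count can resolve."""
    return 1 / CESIUM_CYCLES_PER_SECOND


if __name__ == "__main__":
    print(elapsed_seconds(CESIUM_CYCLES_PER_SECOND))  # 1
    print(cycle_period_seconds())
```

A single cycle lasts roughly a tenth of a nanosecond, which gives a sense of how fine a time step a cycle count can resolve.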

There are other ways to build a quantum sensor, however. For example, researchers at the University of Birmingham in England are working to develop sensors that use free-falling, supercooled atoms to detect tiny changes in local gravity. This kind of quantum gravimeter would be capable of detecting buried pipes, cables and other objects that today can be reliably found only by digging. Seafaring ships could use similar technology to detect underwater objects.

Most quantum-sensing systems remain expensive, oversized and complex, but a new generation of smaller, more affordable sensors should open up new applications. Last year researchers at the Massachusetts Institute of Technology used conventional fabrication methods to put a diamond-based quantum sensor on a silicon chip, squeezing multiple, traditionally bulky components onto a square a few tenths of a millimeter wide. The prototype is a step toward low-cost, mass-produced quantum sensors that work at room temperature and that could be used for any application that involves taking fine measurements of weak magnetic fields.

Quantum systems remain extremely susceptible to disturbances, which could limit their application to controlled environments. But governments and private investors are throwing money at this and other challenges, including those of cost, scale and complexity; the U.K., for example, has put £315 million into the second phase of its National Quantum Technologies Programme (2019–2024). Industry analysts expect quantum sensors to reach the market in the next three to five years, with an initial emphasis on medical and defense applications.

ENERGY: Green Hydrogen

Zero-carbon energy to supplement wind and solar

By Jeff Carbeck

When hydrogen burns, the only by-product is water—which is why hydrogen has been an alluring zero-carbon energy source for decades. Yet the traditional process for producing hydrogen, in which fossil fuels are exposed to steam, is not even remotely zero-carbon. Hydrogen produced this way is called gray hydrogen; if the CO2 is captured and sequestered, it is called blue hydrogen.

Green hydrogen is different. It is produced through electrolysis, in which machines split water into hydrogen and oxygen, with no other by-products. Historically, electrolysis required so much electricity that it made little sense to produce hydrogen that way. The situation is changing for two reasons. First, significant amounts of excess renewable electricity have become available at grid scale; rather than storing excess electricity in arrays of batteries, the extra electricity can be used to drive the electrolysis of water, “storing” the electricity in the form of hydrogen. Second, electrolyzers are getting more efficient.

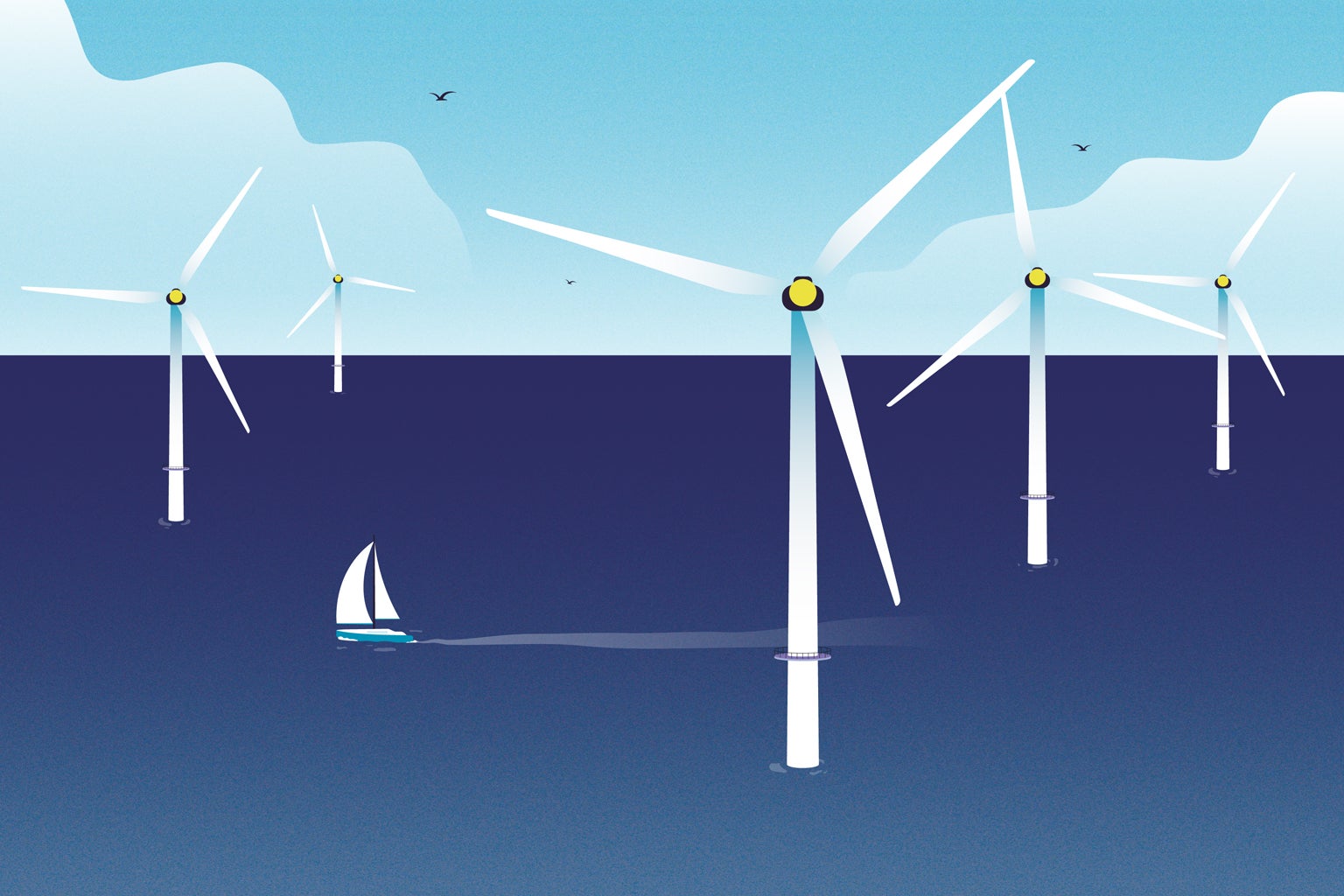

Companies are working to develop electrolyzers that can produce green hydrogen as cheaply as gray or blue hydrogen, and analysts expect them to reach that goal in the next decade. Meanwhile energy companies are starting to integrate electrolyzers directly into renewable power projects. For example, a consortium of companies behind a project called Gigastack plans to equip Ørsted's Hornsea Two offshore wind farm with 100 megawatts of electrolyzers to generate green hydrogen at an industrial scale.

Current renewable technologies such as solar and wind can decarbonize the energy sector by as much as 85 percent by replacing gas and coal with clean electricity. Other parts of the economy, such as shipping and manufacturing, are harder to electrify because they often require fuel that is high in energy density or heat at high temperatures. Green hydrogen has potential in these sectors. The Energy Transitions Commission, an industry group, says green hydrogen is one of four technologies necessary for meeting the Paris Agreement goal of abating more than 10 gigatons of carbon dioxide a year from the most challenging industrial sectors, among them mining, construction and chemicals.

Although green hydrogen is still in its infancy, countries—especially those with cheap renewable energy—are investing in the technology. Australia wants to export hydrogen that it would produce using its plentiful solar and wind power. Chile has plans for hydrogen in the country's arid north, where solar electricity is abundant. China aims to put one million hydrogen fuel–cell vehicles on the road by 2030.

Similar projects are underway in South Korea, Malaysia, Norway and the U.S., where the state of California is working to phase out fossil-fuel buses by 2040. And the European Commission's recently published 2030 hydrogen strategy calls for increasing hydrogen capacity from 0.1 gigawatt today to 500 gigawatts by 2050. All of which is why, earlier this year, Goldman Sachs predicted that green hydrogen will become a $12-trillion market by 2050.
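To put the European capacity target in perspective, the short sketch below computes the compound annual growth rate implied by going from 0.1 gigawatt to 500 gigawatts. It assumes "today" means 2020, giving a 30-year span, and it assumes smooth year-over-year growth, which real deployment is unlikely to follow.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start into end."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must all be positive")
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # 0.1 GW growing to 500 GW over an assumed 30 years (2020 to 2050).
    rate = implied_annual_growth(0.1, 500, 30)
    print(f"Implied growth: {rate:.1%} per year")
```

Under those assumptions, capacity would need to grow by roughly 33 percent every year for three decades.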

SYNTHETIC BIOLOGY: Whole-Genome Synthesis

Next-level cell engineering

By Andrew Hessel and Sang Yup Lee

Early in the COVID-19 pandemic, scientists in China uploaded the virus's genetic sequence (the blueprint for its production) to genetic databases. A Swiss group then synthesized the entire genome and produced the virus from it—essentially teleporting the virus into their laboratory for study without having to wait for physical samples. Such speed is one example of how whole-genome printing is advancing medicine and other endeavors.

Whole-genome synthesis is an extension of the booming field of synthetic biology. Researchers use software to design genetic sequences that they produce and introduce into a microbe, thereby reprogramming the microbe to do desired work—such as making a new medicine. So far genomes mainly get light edits. But improvements in synthesis technology and software are making it possible to print ever larger swaths of genetic material and to alter genomes more extensively.

Viral genomes, which are tiny, were produced first, starting in 2002 with the poliovirus's roughly 7,500 nucleotides, or code letters. As with the coronavirus, these synthesized viral genomes have helped investigators gain insight into how the associated viruses spread and cause disease. Some are being designed to serve in the production of vaccines and immunotherapies.

Writing genomes that contain millions of nucleotides, as in bacteria and yeast, has become tractable as well. In 2019 a team printed a version of the Escherichia coli genome that made room for codes that could force the bacterium to do scientists' bidding. Another team has produced an initial version of the brewer's yeast genome, which consists of almost 11 million code letters. Genome design and synthesis at this scale will allow microbes to serve as factories for producing not only drugs but any number of substances. They could be engineered to sustainably produce chemicals, fuels and novel construction materials from nonfood biomass or even waste gases such as carbon dioxide.
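One way to "make room" in a genome is to replace every occurrence of certain codons with synonymous ones, which frees the retired codons for new uses. The toy sketch below shows the idea on a short in-frame coding sequence. The function name and the particular swaps are illustrative assumptions under the standard genetic code, not a description of any team's actual design pipeline, which must also handle overlapping genes, regulatory sequences and much else.

```python
# Illustrative synonymous swaps under the standard genetic code:
# TCG and TCA (serine) become AGC and AGT (also serine);
# TAG (stop) becomes TAA (also stop).
RECODING = {"TCG": "AGC", "TCA": "AGT", "TAG": "TAA"}


def recode(coding_sequence: str) -> str:
    """Replace retired codons with synonyms, reading the sequence in frame."""
    seq = coding_sequence.upper()
    if len(seq) % 3 != 0:
        raise ValueError("coding sequence length must be a multiple of 3")
    # Split into codons so that only in-frame triplets are replaced.
    codons = [seq[i:i + 3] for i in range(0, len(seq), 3)]
    return "".join(RECODING.get(codon, codon) for codon in codons)


if __name__ == "__main__":
    original = "ATGTCGGCATCATAG"  # Met-Ser-Ala-Ser-stop
    print(recode(original))       # ATGAGCGCAAGTTAA, same protein
```

Because each swap is synonymous, the encoded protein is unchanged, yet the recoded sequence no longer uses TCG, TCA or TAG. Splitting into triplets first matters: a naive text search would also alter out-of-frame matches and change the protein.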

Many scientists want the ability to write larger genomes, such as those from plants, animals and humans. Getting there requires greater investment in design software (most likely incorporating artificial intelligence) and in faster, cheaper methods for synthesizing and assembling DNA sequences at least millions of nucleotides long. With sufficient funding, the writing of genomes on the billion-nucleotide scale could be a reality before the end of this decade. Investigators have many applications in mind, including the design of plants that resist pathogens and an ultrasafe human cell line—impervious, say, to virus infections, cancer and radiation—that could be the basis for cell-based therapies or for biomanufacturing. The ability to write our own genome will inevitably emerge, enabling doctors to cure many, if not all, genetic diseases.

Of course, whole-genome engineering could be misused, with the chief fear being weaponized pathogens or their toxin-generating components. Scientists and engineers will need to devise a comprehensive biological security filter: a set of existing and novel technologies able to detect and monitor the spread of new threats in real time. Investigators will need to invent testing strategies that can scale rapidly. Critically, governments around the world must cooperate much more than they do now.

The Genome Project-write, a consortium formed in 2016, is positioned to facilitate this safety net. The project includes hundreds of scientists, engineers and ethicists from more than a dozen countries who develop technologies, share best practices, carry out pilot projects, and explore ethical, legal and societal implications.
