Luke Miller, a cognitive neuroscientist, was toying with a curtain rod in his apartment when he was struck by a strange realization. When he hit an object with the rod, he could tell where it was making contact, even without looking, as if the rod were a sensory extension of his body. “That’s kind of weird,” Miller recalls thinking to himself. “So I went [to the lab], and we played around with it in the lab.”

Sensing touch through tools is not a new concept, though it has not been extensively investigated. In the 17th century, the philosopher René Descartes discussed how blind people sense their surroundings through their walking canes. Scientists have researched tool use extensively, but they have typically focused on how people move tools. “They, for the most part, neglected the sensory aspect of tool use,” Miller says.

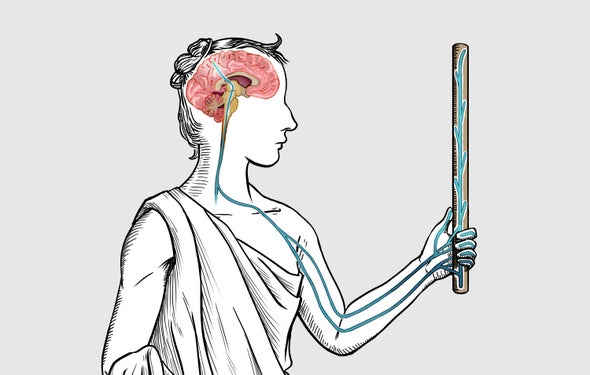

In a 2018 Nature study, Miller and his colleagues at Claude Bernard Lyon 1 University in France reported that humans are actually quite good at pinpointing, by touch alone, where an object comes into contact with a handheld tool, as if the object were touching their own skin. A tool is not innervated like our skin, so how does our brain know when and where it is touched? Results from a follow-up study, published in December in Current Biology, reveal that the brain regions involved in sensing touch on the body process touch on the tool in a similar way. “The tool is being treated like a sensory extension of your body,” Miller says.

In the initial experiment, the researchers asked 16 right-handed subjects to determine where they felt touches on a one-meter-long wooden rod. Over a total of 400 trials, each subject compared the locations of two touches made on the rod. If the touches were felt in different locations, participants did not respond. If they were felt in the same location, participants tapped a foot pedal to indicate whether the touches were close to or far from their hand. Even without any prior experience with the rod or feedback on their performance, the participants were, on average, 96 percent accurate.

During the experiment, the researchers recorded subjects’ cortical brain activity using scalp electrodes and found that the cortex rapidly processed where the tool was touched. In trials in which the rod was touched in the same location twice in a row, neural responses were markedly suppressed in brain areas previously shown to identify touch on the body, including the primary somatosensory (touch) cortex and the posterior parietal cortex.

There is evidence that when sensory brain regions are presented with the same stimulus repeatedly, the responses of the underlying neural population are suppressed. This repetition suppression can be measured and used as a “time stamp” marking when the brain has extracted a given feature of a stimulus, such as its location.

When the team tested some of the same subjects with touches on their arm instead of the rod, the researchers observed similar repetition suppression in the same brain regions on similar time scales. Activity in the somatosensory cortex was suppressed within 52 milliseconds (about one twentieth of a second) of contact on both the rod and the arm. By 80 milliseconds, the suppression had spread throughout the posterior parietal cortex. These results indicate that the neural mechanisms for detecting touch location on tools “are remarkably similar to what happens to localize touch on your own body,” says Alessandro Farnè, a neuroscientist at the Lyon Neuroscience Research Center in France and senior author of both studies.

After each contact, the rod vibrates for about 100 milliseconds, Miller says. “So by the time the rod is done vibrating in the hand, you’ve already extracted the location dozens of milliseconds before that,” he adds. The rod’s vibrations are detected by touch sensors embedded in our skin called Pacinian receptors, which then relay neural signals up to the somatosensory cortex. Computer simulations of Pacinian activity in the hand showed that information about where the rod was contacted could be extracted efficiently within 20 milliseconds.

The rod’s vibrations may provide the key information needed for touch localization. Repeating the rod experiment, the researchers tested a patient who had lost proprioception in her right arm, meaning she could not sense the limb’s position in space. She could still sense superficial touch, however. She was able to localize where the rod was touched when it was held in both hands, and her brain activity during the task was similar to that of the healthy participants. That finding “suggests quite convincingly that vibration conveyed through the touch, which is spared in the patient, is sufficient for the brain to locate touches on the rod,” Farnè says.

Taken together, these results indicate that people can locate touches on a tool quickly and efficiently, using the same neural processes they use to detect touch on the body. While Farnè emphasizes that no one in the studies thought the tool had “become part of their own body,” he says the work indicates that the subjects experienced sensory embodiment, “in which the brain repurposes strategies for dealing with objects by reusing what it knows about the body.”

“This is really beautiful, comprehensive and thoughtful work,” says Scott Frey, a cognitive neuroscientist who researches neuroprosthetics at the University of Missouri. Frey, who was not involved in the studies, believes the results could help inform the design of better prostheses because they suggest that “insensate objects can become, potentially, ways of detecting information from the world and relaying it toward the somatosensory systems,” he says. “And that’s not something that I think people in the world of prosthetics design really thought about. But maybe this suggests that they should. And that’s kind of a neat, novel idea that could come out of it.”