In September 2019 a swarm of 18 bomb-laden drones and seven cruise missiles overwhelmed Saudi Arabia's advanced air defenses to crash into the Abqaiq and Khurais oil fields and their processing facilities. The surprisingly sophisticated attack, which Yemen's Houthi rebels claimed responsibility for, halved the nation's output of crude oil and natural gas and forced an increase in global oil prices. The drones were likely not fully autonomous, however: they did not communicate with one another to pick their own targets, such as specific storage tanks or refinery buildings. Instead each drone appears to have been preprogrammed with precise coordinates to which it navigated over hundreds of kilometers by means of a satellite positioning system.

Fully autonomous weapons systems (AWSs) may be operating in war theaters even as you read this article, however. Turkey has announced plans to deploy a fleet of autonomous Kargu quadcopters against Syrian forces in early 2020, and Russia is also developing aerial swarms for that region. Once launched, an AWS finds, tracks, selects and attacks targets with violent force, all without human supervision.

Autonomous weapons are not self-aware, humanoid “Terminator” robots conspiring to take over; they are computer-controlled tanks, planes, ships and submarines. Even so, they represent a radical change in the nature of warfare. Humans are outsourcing the decision to kill to a machine—with no one watching to ascertain the legitimacy of an attack before it is carried out. Since the mid-2000s, when the U.S. Department of Defense triggered a global artificial-intelligence arms race by signaling its intent to develop autonomous weapons for all branches of the armed forces, every major power and several lesser ones have been striving to acquire these systems. According to U.S. Secretary of Defense Mark Esper, China is already exporting AWSs to the Middle East.

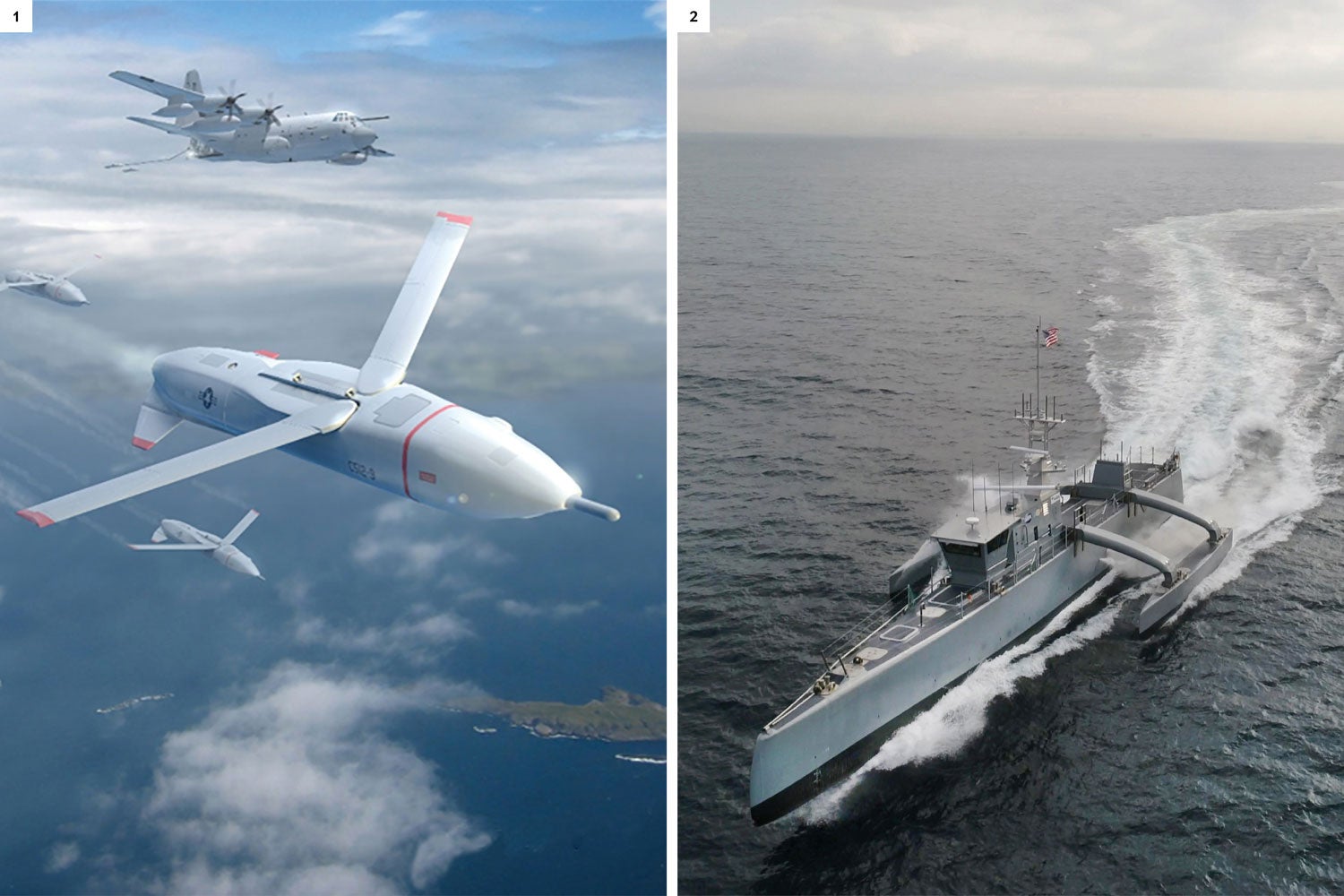

The military attractions of autonomous weapons are manifold. For example, the U.S. Navy's X-47B, an unmanned fighter jet that can take off from and land on aircraft carriers even in windy conditions and refuel in the air, will have 10 times the reach of piloted fighter jets. The U.S. has also developed an unmanned transoceanic warship called Sea Hunter, to be accompanied by a flotilla of DASH (Distributed Agile Submarine Hunting) submarines. In January 2019 the Sea Hunter traveled from San Diego to Hawaii and back, demonstrating its suitability for use in the Pacific. Russia is automating its state-of-the-art T-14 Armata tank, presumably for deployment at the European border; meanwhile weapons manufacturer Kalashnikov has demonstrated a fully automated combat module to be mounted on existing weapons systems (such as artillery guns and tanks) to enable them to sense, choose and attack targets. Not to be outdone, China is working on AI-powered tanks and warships, as well as a supersonic autonomous air-to-air combat aircraft called Anjian, or Dark Sword, that can twist and turn so sharply and quickly that the g-force generated would kill a human pilot.

Given such fierce competition, the focus is inexorably shifting to ever faster machines and autonomous drone swarms, which can overwhelm enemy defenses with a massive, multipronged and coordinated attack. Much of the push toward such weapons comes from defense contractors eyeing the possibility of large profits, but high-ranking military commanders nervous about falling behind in the artificial-intelligence arms race also play a significant role. Some nations, in particular the U.S. and Russia, are looking only at the potential military advantages of autonomous systems—a blinkered view that prevents them from considering the disturbing scenarios that can unfold when rivals catch up.

As a roboticist, I recognized the necessity of meaningful human control over weapons systems when I first learned of the plans to build AWSs. We are facing a new era in warfare, much like the dawn of the atomic age. It does not take a historian to realize that once a new class of weapon is in the arsenals of military powers, its use will incrementally expand, placing humankind at risk of conflicts that can barely be imagined today. In 2009 I and three other academics set up the International Committee for Robot Arms Control, which later teamed up with other nongovernmental organizations (NGOs) to form the Campaign to Stop Killer Robots. Now a coalition of 130 NGOs from 60 countries, the campaign seeks to persuade the United Nations to negotiate a legally binding treaty that would prohibit the development, testing and production of weapons that select targets and attack them with violent force without meaningful human control.

Time to Think

The ultimate danger of systems for warfare that take humans out of the decision-making loop is illustrated by the true story of “the man who saved the world.” In 1983 Lieutenant Colonel Stanislav Petrov was on duty at a Soviet nuclear early-warning center when his computer sounded a loud alarm and the word “LAUNCH” appeared in bold red letters on his screen, indicating that a U.S. nuclear missile was fast approaching. Petrov held his nerve and waited. A second launch warning rang out, then a third and a fourth. With the fifth, the red “LAUNCH” on his screen changed to “MISSILE STRIKE.” Time was ticking away for the U.S.S.R. to retaliate, but Petrov continued his deliberation. “Then I made my decision,” Petrov said in a BBC interview in 2013. “I would not trust the computer.” He reported the nuclear attack as a false alarm, even though he could not be certain. As it turned out, the onboard computing system on the Soviet satellites had misclassified sunlight reflecting off clouds as the engines of intercontinental ballistic missiles.

This tale illustrates the vital role of deliberative human decision-making in war: given those inputs, an autonomous system would have decided to fire. But making the right call takes time. A hundred years' worth of psychological research tells us that if we do not take at least a minute to think things over, we will overlook contradictory information, neglect ambiguity, suppress doubt, ignore the absence of confirmatory evidence, invent causes and intentions, and conform with expectations. Alarmingly, an oft-cited rationale for AWSs is that conflicts are unfolding too quickly for humans to be making the decisions.

“It's a lot faster than me,” Bruce Jette, a U.S. Army acquisitions officer, said last October to Defense News, referring to a targeting system for tanks. “I can't see and think through some of the things it can calculate nearly as fast as it can.” In fact, speed is a key reason that partially autonomous weapons are already in use for some defensive operations, which require that the detection of, evaluation of and response to a threat be completed within seconds. These systems—variously known as SARMO (Sense and React to Military Objects), automated and automatic weapons systems—include Israel's Iron Dome for protecting the country from rockets and missiles; the U.S. Phalanx cannon, mounted on warships to guard against attacks from antiship missiles or helicopters; and the German NBS Mantis gun, used to shoot down smaller munitions such as mortar shells. They are localized, are defensive, do not target humans and are switched on by humans in an emergency—which is why they are not considered fully autonomous.

The distinction is admittedly fine, and weapons on the cusp of SARMO and AWS technology are already in use. Israel's Harpy and Harop aerial drones, for instance, are explosive-laden rockets launched prior to an air attack to clear the area of antiaircraft installations. They cruise around hunting for radar signals, determine whether the signals come from friend or foe and, if the latter, dive-bomb on the assumption that the radar is connected to an antiaircraft installation. In May 2019 secretive Israeli drones—according to one report, Harops—blew up Russian-made air-defense systems in Syria.

These drones are “loitering munitions” and speed up only when attacking, but several fully autonomous systems will range in speed from fast subsonic to supersonic to hypersonic. For example, the U.S.'s Defense Advanced Research Projects Agency (DARPA) has tested the Falcon unmanned hypersonic aircraft at speeds around 20 times the speed of sound—approximately 21,000 kilometers per hour.
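The conversion from “20 times the speed of sound” to kilometers per hour depends on the air temperature, because the speed of sound does. The short sketch below shows the arithmetic, assuming the ideal-gas formula for the speed of sound and the standard-atmosphere temperature of the lower stratosphere (216.65 kelvins). The altitude and conditions of the actual test flight are not given here, so the temperatures are illustrative assumptions.

```python
import math

GAMMA = 1.4      # ratio of specific heats for dry air
R_AIR = 287.05   # specific gas constant for dry air, J/(kg*K)


def speed_of_sound_ms(temp_kelvin):
    """Speed of sound in dry air (m/s) from the ideal-gas relation."""
    return math.sqrt(GAMMA * R_AIR * temp_kelvin)


def mach_to_kmh(mach, temp_kelvin):
    """Convert a Mach number to km/h at the given air temperature."""
    return mach * speed_of_sound_ms(temp_kelvin) * 3.6


if __name__ == "__main__":
    # Assumed temperatures: standard stratosphere and standard sea level.
    for label, temp in [("stratosphere", 216.65), ("sea level", 288.15)]:
        print(f"Mach 20 at {label}: {mach_to_kmh(20, temp):,.0f} km/h")
```

With the colder stratospheric value the result lands close to the 21,000 kilometers per hour quoted above; at sea-level temperature the same Mach number would correspond to a noticeably higher speed.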

In addition to speed, militaries are pursuing “force multiplication”—increases in the destructive capacity of a weapons system—by means of autonomous drones that cooperate like wolves in a pack, communicating with one another to choose and hunt individual targets. A single human can launch a swarm of hundreds (or even thousands) of armed drones into the air, on the land or water, or under the sea. Once the AWS has been deployed, the operator becomes at best an observer who could abort the attack—if communication links have not been broken.

To this end, the U.S. is developing swarms of fixed-wing drones such as Perdix and Gremlin, which can travel long distances with missiles. DARPA has field-tested the coordination of swarms of aerial quadcopters (known for their high maneuverability) with ground vehicles, and the Office of Naval Research has demonstrated a fleet of 13 boats that can “overwhelm an adversary.” The China Electronics Technology Group, in a move that reveals the country's intentions, has (separately) tested a group of 200 fixed-wing drones, as well as 56 small drone ships for attacking enemy warships. In contrast, Russia seems to be mainly interested in tank swarms that can be used for coordinated attacks or be laid out to defend national borders.

Gaming the Enemy

The Petrov story also shows that although computers may be fast, they are often wrong. Even now, with the incredible power and speed of modern computing and sensor processing, AI systems can err in many unpredictable ways. In 2012 the Department of Defense acknowledged the potential for such computer issues with autonomous weapons and asserted the need to minimize human errors, failures in human-machine interactions, malfunctions, degradation of communications and coding glitches in software. Apart from these self-evident safeguards, autonomous systems would also have to be protected from subversion by adversaries via cyberattacks, infiltration of the industrial supply chain, jamming of signals, spoofing (misleading of positioning systems) and deployment of decoys.

In reality, protecting against disruptions by the enemy will be extremely difficult, and the consequences of these assaults could be dire. Jamming would block communications so that an operator would not be able to abort attacks or redirect weapons. It could disrupt coordination between robotic weapons in a swarm and make them run out of control. Spoofing, which sends a strong false GPS signal, can cause devices to lose their way or be guided to crash into buildings.

Decoys are real or virtual entities that deceive sensors and targeting systems. Even the most sophisticated artificial-intelligence systems can easily be gamed. Researchers have found that a few dots or lines cleverly added to a sign, in such a way as to be unnoticeable to humans, can mislead a self-driving car so that it swerves into another lane against oncoming traffic or ignores a stop sign. Imagine the kinds of problems such tricks could create for autonomous weapons. Onboard computer controllers could, for example, be fooled into mistaking a hot dog stand for a tank.
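To see why tiny, nearly invisible changes can flip a machine's verdict, consider a toy example. The sketch below is not how any real targeting system or self-driving car works; it is a hand-built linear classifier over a hypothetical 100-pixel image, with weights, bias and pixel values invented for illustration. The trick, in the spirit of what researchers call the fast gradient sign method, is to nudge every pixel a little in whichever direction raises the score.

```python
def score(weights, bias, pixels):
    """Linear classifier score: a positive value means 'tank'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def classify(weights, bias, pixels):
    return "tank" if score(weights, bias, pixels) > 0 else "not a tank"


def perturb(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that raises the score."""
    def sign(w):
        return (w > 0) - (w < 0)
    return [p + epsilon * sign(w) for w, p in zip(weights, pixels)]


# Hypothetical model and image, chosen by hand for illustration only.
WEIGHTS = [0.5 if i % 2 == 0 else -0.5 for i in range(100)]
BIAS = -1.0
IMAGE = [0.5] * 100   # a uniform gray image, pixel values on a 0-to-1 scale

if __name__ == "__main__":
    doctored = perturb(WEIGHTS, IMAGE, epsilon=0.05)
    print("original:", classify(WEIGHTS, BIAS, IMAGE))
    print("doctored:", classify(WEIGHTS, BIAS, doctored))
```

No pixel moves by more than 0.05 on a 0-to-1 scale, yet the small shifts all push the same way and add up across 100 inputs, which is enough to carry the score over the decision threshold. Real images have millions of pixels, so the change needed per pixel can be far smaller still.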

Most baffling, however, is the last directive on the Defense Department's list: minimizing “other enemy countermeasures or actions, or unanticipated situations on the battlefield.” It is impossible to minimize unanticipated situations on the battlefield because you cannot minimize what you cannot anticipate. A conflict zone will feature a potentially infinite number of unforeseeable circumstances; the very essence of conflict is to surprise the enemy. When it comes to AWSs, there are many ways to trick sensor processing or disrupt computer-controlled machinery.

One overwhelming computer problem the Department of Defense's directive misses, rather astonishingly, is the unpredictability of machine-machine interactions. What happens when enemy autonomous weapons confront one another? The worrisome answer is that no one knows or can know. Every AWS will have to be controlled by a top-secret computer algorithm. Its combat strategy will have to be unknown to others to prevent successful enemy countermeasures. The secrecy makes sense from a security perspective—but it dramatically reduces the predictability of the weapons' behavior.

A clear example of algorithmic confrontation run amok was provided by two booksellers, bordeebook and profnath, on the Amazon Web site in April 2011. Usually the out-of-print 1992 book The Making of a Fly sold for around $50 plus $3.99 shipping. But the two sellers' pricing algorithms were keyed to each other: every time bordeebook raised its price, so did profnath, which in turn pushed bordeebook's price up again, and so on. Within a week bordeebook was selling the book for $23,698,655.93 plus $3.99 shipping before anyone noticed. Two simple and highly predictable computer algorithms went out of control because their clashing strategies were unknown to competing sellers.
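The runaway loop is easy to reproduce. The sketch below assumes the pricing rules described in public accounts of the incident: profnath set its price at 0.9983 times bordeebook's, and bordeebook set its price at 1.270589 times profnath's. Those multipliers, the $50 starting price and the function names are assumptions made for illustration; neither seller's actual code is known.

```python
from decimal import Decimal, ROUND_HALF_UP

# Assumed multipliers, taken from public accounts of the incident.
PROFNATH_FACTOR = Decimal("0.9983")      # undercut the rival slightly
BORDEEBOOK_FACTOR = Decimal("1.270589")  # price well above the rival


def to_cents(amount):
    """Round a price to whole cents."""
    return amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def simulate(start_price, rounds):
    """Return (profnath, bordeebook) prices after each repricing round."""
    bordeebook = Decimal(start_price)
    history = []
    for _ in range(rounds):
        profnath = to_cents(bordeebook * PROFNATH_FACTOR)
        bordeebook = to_cents(profnath * BORDEEBOOK_FACTOR)
        history.append((profnath, bordeebook))
    return history


def rounds_to_exceed(start_price, limit):
    """Count repricing rounds until bordeebook's price passes `limit`."""
    price = Decimal(start_price)
    limit = Decimal(limit)
    rounds = 0
    while price <= limit:
        price = to_cents(to_cents(price * PROFNATH_FACTOR) * BORDEEBOOK_FACTOR)
        rounds += 1
    return rounds


if __name__ == "__main__":
    for n, (p, b) in enumerate(simulate("50.00", 10), start=1):
        print(f"round {n:2d}: profnath ${p:,.2f}  bordeebook ${b:,.2f}")
    print("rounds to pass $1 million:", rounds_to_exceed("50.00", "1000000"))
```

Each rule is sensible on its own: one seller undercuts by a hair, the other marks up. Together they multiply the price by roughly 1.27 on every round, so growth is exponential. The loop would have been stable only if the product of the two multipliers were 1 or less, something neither seller could check without knowing the other's rule.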

Although this mispricing was harmless, imagine what could happen if the complex combat algorithms of two swarms of autonomous weapons interacted at high speed. Apart from the uncertainties introduced by gaming with adversarial images, jamming, spoofing, decoys and cyberattacks, one must contend with the impossibility of predicting the outcome when computer algorithms battle it out. It should be clear that these weapons represent a very dangerous alteration in the nature of warfare. Accidental conflicts could break out so fast that commanders have no time to understand or respond to what their weapons are doing—leaving devastation in their wake.

On the Russian Border

Imagine the following scenario, one among many nightmarish confrontations that could accidentally transpire—unless the race toward AWSs can be stopped. It is 2040, and thousands of autonomous supertanks glisten with frost along Russia's border with Europe. Packs of autonomous supersonic robot jets fly overhead, scouring for enemy activity. Suddenly a tank fires a missile over the horizon, and a civilian airliner goes down in flames. It is an accident—a sensor glitch triggered a confrontation mode—but the tanks do not know that. They rumble forward en masse toward the border. The fighter planes shift into battle formation and send alerts to fleets of robot ships and shoals of autonomous submarines in the Black, Barents and White Seas.

After less than 10 seconds, NATO's autonomous counterweapons swoop in from the air, and attack formations begin to develop on the ground and in the sea. Each side's combat algorithms are unknown to its enemy, so no one can predict how the opposing forces will interact. The fighter jets avoid one another by swooping, diving and twisting with centrifugal forces that would kill any human, and they communicate among themselves at machine speeds. Each side has many tricks up its sleeve for gaming the other. These include disrupting each other's signals and spoofing with fake GPS coordinates to upset coordination and control.

Within three minutes hundreds of jets are fighting in the skies over Russian and European cities at near-hypersonic speed. The tanks have burst across the border and are firing on communications infrastructure, as well as at all moving vehicles at railway stations and on roads. Large guns on autonomous ships are pounding the land. Autonomous naval battles have broken out on and under the seas. Military leaders on both sides are trying to make sense of the devastation that is happening around them. But what can they do? All communications with the weapons have been jammed, and there is a complete breakdown of command-and-control structures. Only 22 minutes have passed since the accidental shooting-down of the airliner, and swarms of tanks are fast approaching Helsinki, Tallinn, Riga, Vilnius, Kyiv and Tbilisi.

Russian and Western leaders begin urgent discussions, but no one can work out how this started or why. Fingers are itching on nuclear buttons as near-futile efforts are underway to evacuate the major cities. There is no precedent for this chaos, and the militaries are befuddled. Their planning has fallen apart, and the death toll is ramping up by the millisecond. Navigation systems have been widely spoofed, so some of the weapons are breaking from the swarms and crashing into buildings. Others have been hacked and are going on killing sprees. False electronic signals are making weapons fire at random. The countryside is littered with the bodies of animals and humans; cities lie in ruins.

The Importance of Humans

A binding international treaty to prohibit the development, production and use of AWSs and to ensure meaningful human control over weapons systems becomes more urgent every day. A human expert, with full awareness of the situation and context and with sufficient time to deliberate on the nature, significance and legitimacy of the targets, the necessity and appropriateness of an attack and the likely outcomes, should determine whether or not the attack will commence. For the past six years the Campaign to Stop Killer Robots has been trying to persuade the member states of the U.N. to agree on a treaty. We work at the U.N. Convention on Certain Conventional Weapons (CCW), a forum of 125 nations for negotiating bans on weapons that cause undue suffering. Thousands of scientists and leaders in the fields of computing and machine learning have joined this call, and so have many companies, such as Google's DeepMind. At last count, 30 nations had demanded an outright ban of fully autonomous weapons, but most others want regulations to ensure that humans are responsible for making the decision to attack (or not). Progress is being blocked, however, by a small handful of nations led by the U.S., Russia, Israel and Australia.

At the CCW, Russia and the U.S. have made it clear that they are opposed to the term “human control.” The U.S. is striving to replace it with “appropriate levels of human judgment”—which could mean no human control at all, if that were deemed appropriate. Fortunately, some hope still exists. U.N. Secretary-General António Guterres informed the group of governmental experts at the CCW that “machines with the power and discretion to take lives without human involvement are politically unacceptable, are morally repugnant and should be prohibited by international law.” Common sense and humanity must prevail—before it is too late.