Imagine hosting a party. You arrange snacks, curate a playlist and place a variety of beers in the refrigerator. Your first guest shows up, adding a six-pack before taking one bottle for himself. You watch your next guest arrive and contribute a few more beers, minus one for herself. Ready for a drink, you open the fridge and are surprised to find only eight beers remaining. You haven't been consciously counting the beers, but you know there should be more, so you start poking around. Sure enough, in the crisper drawer, behind a rotting head of romaine, are several bottles.

How did you know to look for the missing beer? It's not like you were standing guard at the refrigerator, tallying how many bottles went in and out. Rather you were using what cognitive scientists call your number sense, a part of the mind that unconsciously solves simple math problems. While you were immersed in conversation with guests, your number sense was keeping tabs on how many beers were in the fridge.

For a long time scientists, mathematicians and philosophers have debated whether this number sense comes preinstalled or is learned over time. Plato was among the first in the Western tradition to propose that humans have innate mathematical abilities. In Plato's dialogue Meno, Socrates coaxes the Pythagorean theorem out of an uneducated boy by asking him a series of simple questions. Socrates's takeaway is that the boy had innate knowledge of the Pythagorean theorem all along; the questioning just helped him express it.

In the 17th century John Locke rejected this idea, insisting that the human mind begins as a tabula rasa, or blank slate, with almost all knowledge acquired through experience. This view, known as empiricism in contrast to Plato's nativism, was later developed further by John Stuart Mill, who argued that we learn two plus three is five by seeing many examples where it holds true: two apples and three apples make five apples, two beers and three beers make five beers, and so on.

Empiricism dominated philosophy and psychology until the second half of the 20th century, when nativist-friendly thinkers such as Noam Chomsky swung the pendulum back toward Plato. Chomsky focused on language, proposing that children are born with an innate language instinct that enables them to quickly acquire their first language with little in the way of explicit instruction.

Others then extended Chomsky's hypothesis to mathematics. In the late 1970s cognitive scientists C. R. Gallistel and Rochel Gelman argued that children learn to count by mapping the number words in their language onto an innate system of preverbal counting that humans share with many other animals. In his landmark book The Number Sense, first published in 1997, French neuroscientist Stanislas Dehaene drew attention to the converging evidence for this preverbal system, helping researchers from diverse disciplines—animal cognition, developmental psychology, cognitive psychology, neuroscience, education—realize they were all studying the same thing.

In our 2021 paper in the journal Behavioral and Brain Sciences, we argued that there is no longer a serious alternative to the view that humans and many nonhuman animals have evolved a capacity to process numbers. Whereas Plato proposed that we have innate mathematical knowledge, or a capacity to think about numbers, we argue that we have innate mathematical perception—an ability to see or sense numbers. When you opened the fridge, it's not that you saw the beer bottles and made an inference about their number in the way that you saw Heineken labels and inferred that someone brought a pale lager from the Netherlands. Rather you saw their number much the way you perceived their shape and color.

But not everyone agrees with this emerging consensus, and a new wave of empiricism has arisen over the past decade. Critics who reject the existence of an innate ability to sense numbers highlight a broader and important scientific challenge: How could we ever know the contents of an infant's or a nonhuman animal's mind? As philosophers of cognitive science, we supplement thousands of years of philosophical thinking about this issue by drawing on a mountain of experimental evidence that simply was not available to past thinkers.

Emerging Evidence

Imagine you see two collections of dots flash on a computer screen in quick succession. There's no time to count them, but if you're like the thousands of people who have done this exercise in studies, you'll be able to tell which grouping had more dots if the two are sufficiently different. Although you might struggle to distinguish 50 from 51 dots, you'll be pretty good at recognizing that 40 dots are less numerous than 50. Is this ability innate or the product of years of mathematical education?

In 2004 a team of French researchers, led by Dehaene and Pierre Pica, took that question deep into the Brazilian Amazon. With a solar-powered laptop, Pica performed the same dot-flashing experiment with people from isolated Indigenous villages. These villagers had the same ability to distinguish between sufficiently different numbers of dots, even though they had limited or no formal mathematical training and spoke a language in which precise number words went no higher than five.
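The pattern in the dot-flashing task, where 40 versus 50 dots is easy but 50 versus 51 is hard, can be illustrated with a toy model. The sketch below is not taken from any of the studies described here. It assumes that a set of n dots is perceived as a noisy estimate whose spread grows in proportion to n, and the function name `p_correct` and the noise parameter `w` (set arbitrarily to 0.15) are hypothetical choices made only for illustration.

```python
import math


def p_correct(n1: int, n2: int, w: float = 0.15) -> float:
    """Chance of picking the larger set under a noisy-estimate model.

    Assumption (not from the article): a set of n dots is perceived as a
    draw from a normal distribution with mean n and standard deviation w * n.
    """
    if n1 == n2:
        return 0.5  # nothing to tell apart, so the choice is a coin flip
    # The difference between the two noisy estimates is itself normal,
    # with this standard deviation.
    spread = w * math.sqrt(n1 ** 2 + n2 ** 2)
    z = abs(n1 - n2) / spread
    # Standard normal cumulative distribution evaluated at z.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


for pair in [(50, 51), (40, 50), (4, 5)]:
    print(pair, round(p_correct(*pair), 2))
```

Under these assumptions, 50 versus 51 comes out close to a coin flip, whereas 40 versus 50 is answered correctly most of the time. The pair 4 versus 5 behaves just like 40 versus 50, because in this model only the ratio between the two quantities matters.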

Around the same time, a different group of researchers, including developmental psychologists Elizabeth S. Spelke and Hilary Barth, then both at Harvard University, used a modified dot-flash experiment to show that five-year-olds in Massachusetts also had this ability. One possible explanation is that the children weren't really tracking the number of dots but rather were focusing on some other aspect, such as the total area the dots covered on the screen or the total perimeter of the cluster. When one collection of dots was replaced with a rapid sequence of audible tones, however, the children determined which quantity was greater, that is, whether they had heard more tones or seen more dots, as well as they had in the dots-only experiment. The children couldn't have used surface area or perimeter for that comparison, because the tones didn't have those features. Nor do dots have loudness or pitch. The children weren't using sound duration, either: the tones were presented sequentially over variable durations, whereas the dots were presented all at once for a fixed duration. It seems that the five-year-olds really did have a sense for the number of dots and tones.

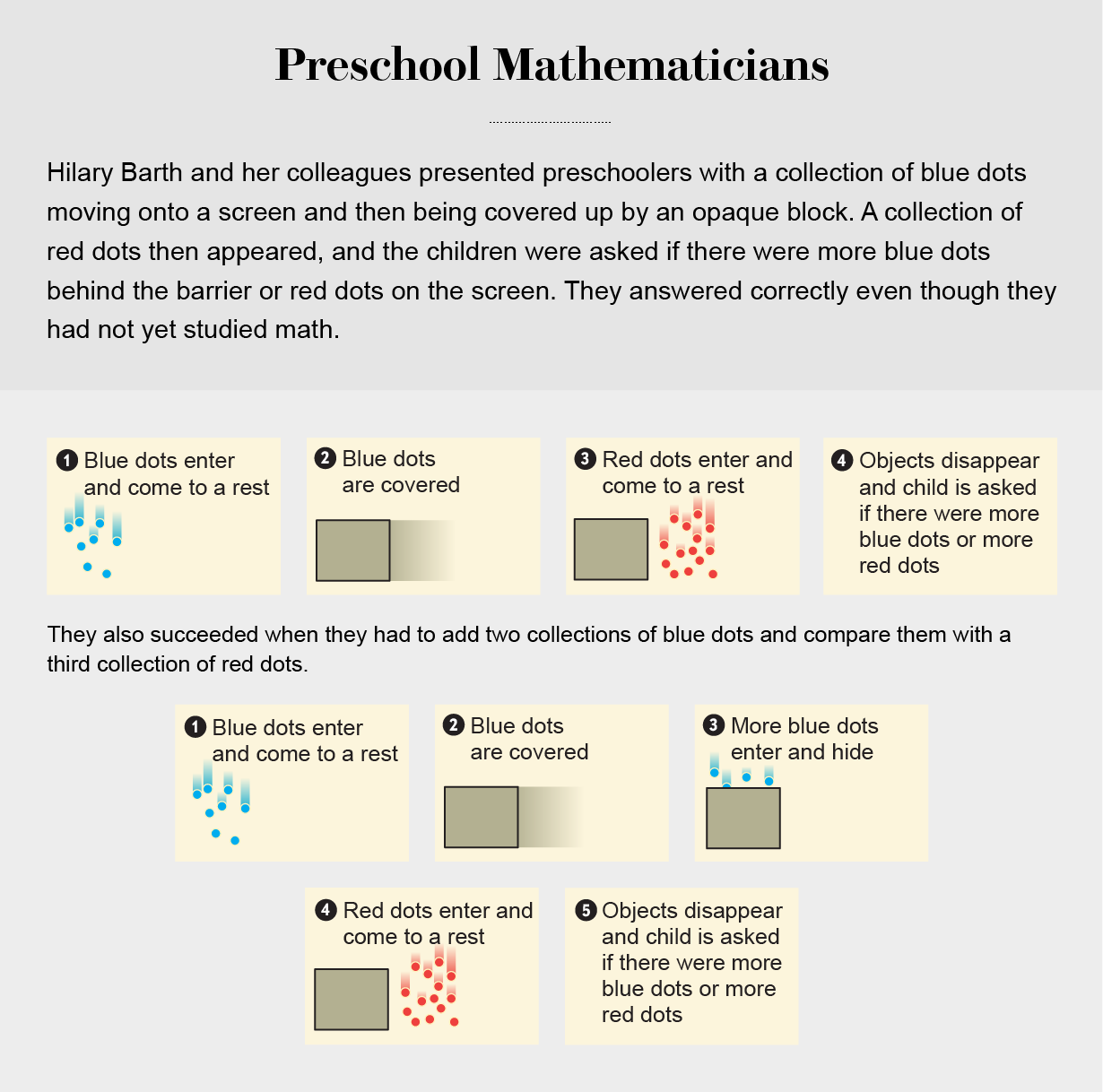

Barth and her colleagues proceeded to show that these numerical abilities support basic forms of arithmetic. In another experiment, the five-year-olds saw two collections of blue dots move behind an opaque block, one after the other. From that point on, none of the blue dots were visible. Then some red dots appeared beside the block. The children were asked whether there were more blue dots or red dots in total. They answered correctly, indicating that they could add the two groups of blue dots together even though they couldn't see them anymore and then compare their total to that of the red dots. In 2021 Chuyan Qu and her colleagues in Elizabeth Brannon's laboratory at the University of Pennsylvania took this further, showing that children as young as five can perform approximate multiplication—an operation that isn't taught until third grade in the U.S.

It's easy to wonder whether these five-year-olds had learned something about numbers from their adult caretakers who had learned math in school. But similar results have been found in a wide range of animal species. Wolves consider the size of their pack before deciding to hunt, preferring a group of two to six to attack an elk but a group of at least nine to take on a bison. Rats learn to press a lever a certain number of times in exchange for food. Ducks take account of how many morsels of food each of two people is throwing into a pond before deciding whom to approach. This behavior suggests that the number sense is evolutionarily ancient, similar to the ability to see colors or to feel warmth or cold.

These examples don't quite get at the question of whether the number sense is innate, though. Perhaps all they suggest is that formal schooling isn't necessary for humans or animals to learn to count. The ideal subjects for testing an innate number sense are newborn infants because they haven't had time to learn much of anything. Of course, they can't talk, so we can't ask them which of two collections contains more. They don't even crawl or reach. They are, however, capable of a simpler action: looking. By measuring where infants look and how much time they spend gazing there, scientists have discovered a window into their minds.

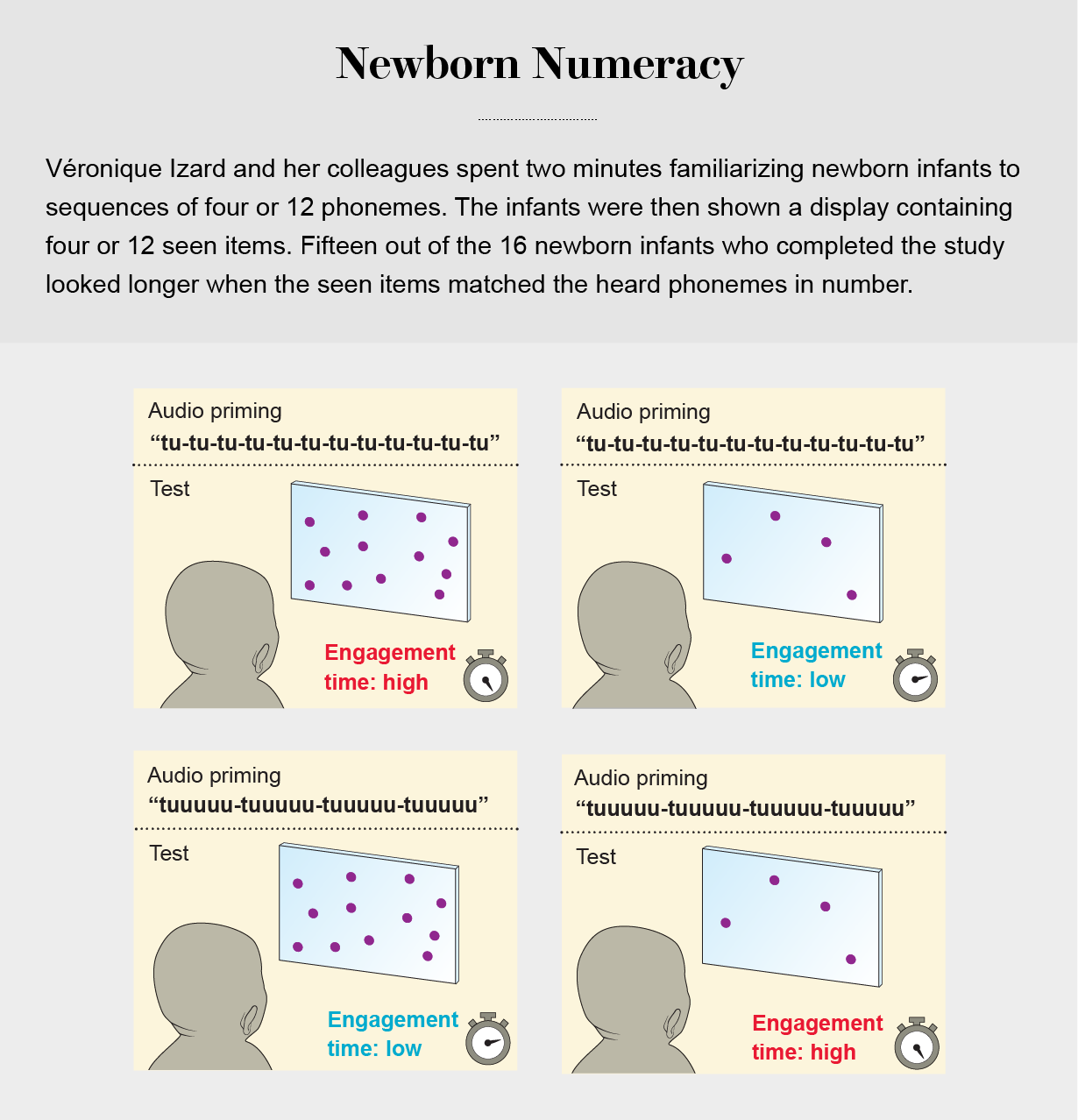

In a 2009 collaboration between Spelke and a team of researchers in France led by Véronique Izard and Arlette Streri, newborns at a hospital in Paris—all younger than five days old—listened for two minutes to auditory sequences containing either four sounds (“tuuu tuuu tuuu tuuu”) or 12 sounds. The researchers then presented the infants with a visual display containing four or 12 objects. Infants are known to like looking at familiar things, such as their mother's face. Izard and her colleagues reasoned that if the infants extracted numbers from the auditory stimuli, they would prefer to look at a display containing a matching number of items—four objects right after they had heard sequences containing four sounds or 12 objects right after hearing sequences containing 12 sounds. Thus, they should look longer at the display when it numerically matches the sounds than when it does not.

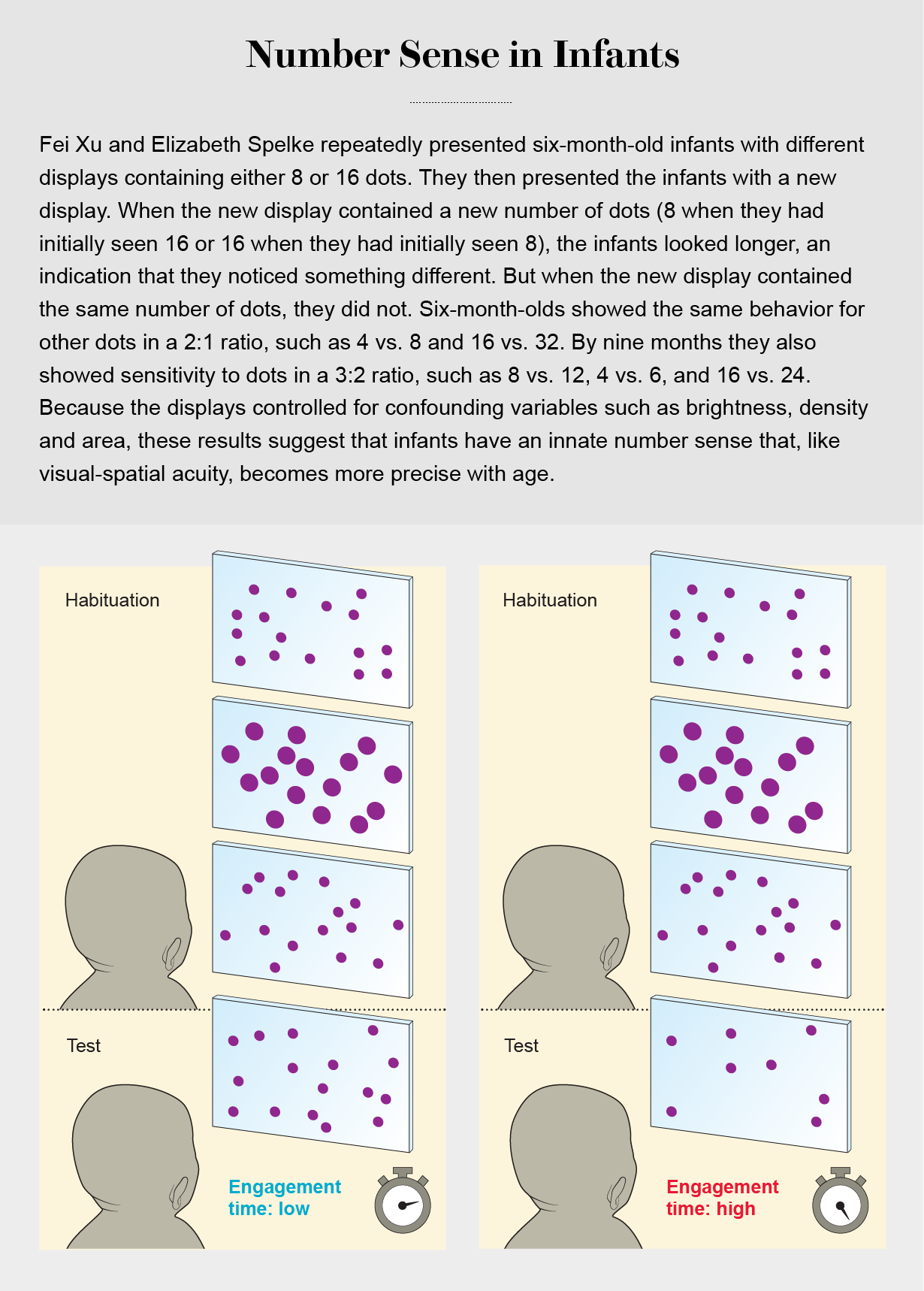

And that's exactly what Izard and her colleagues found. (Just as a smile doesn't always mean the same thing, neither does longer looking: Here newborns looked longer at a match in number, indicating coincidence, whereas the six-month-olds in the Number Sense in Infants graphic above looked longer at a change in number, reflecting surprise at the unexpected. In either case, reliable differences in looking behavior show that infants are sensitive to number.)

Critics such as Tali Leibovich of Haifa University and Avishai Henik of Ben-Gurion University of the Negev in Israel raised concerns about overinterpreting these results, given that newborns have poor eyesight. But the fact that this result held for 15 of the 16 infants tested who didn't succumb to sleep or fussiness is certainly suggestive.

Numbers vs. Numerals

Recall our suggestion that when you opened your fridge at the party, you saw how many beers were present in much the way you saw their shape and color. This wasn't thoughtlessly worded: You didn't see the beer bottles and then judge their number. Rather the number sense enabled you to see the number like you see colors and shapes.

To clarify this idea, it's first important to distinguish numbers from numerals. Numerals are symbols used to refer to numbers. The numerals “7” and “VII” are distinct, but both refer to the same number. The claim that you see numbers should not be confused with a claim that you see numerals. Just as seeing the word “red” is distinct from seeing the color red, seeing the numeral “7” is not the same as seeing the number seven.

Moreover, just as seeing the size of a beer bottle does not involve a symbol like “12 oz” popping up in your visual field, seeing the number of beers in the fridge does not involve seeing a numeral such as “7.” When you see the size of a beer bottle, it looks a certain way—a way that would change if the bottle got bigger or smaller. You can tell by looking that one bottle is bigger than another. Correspondingly, when you see how many beers there are, the beers look a certain way—a way that would change if there were more or fewer of them. Thus, you can tell, just by looking, whether there are more beers over here or over there.

Of course, even once numbers are distinguished from numerals, the concept of seeing numbers may still seem puzzling. After all, numbers are abstract. You can't point at them, because they aren't located in space, and your eyes certainly can't detect any light being reflected off them.

But the idea that you see numbers is not so different from the idea that you see shapes. Although you can see the sail of a boat as triangular, you cannot see a pure triangle on its own, independent of any physical objects. Likewise, although you can see some beers as being about seven in number, you cannot see the number seven all by itself. You can see shapes and numbers but only as attributes of an object or collection of objects that reflects light into your eyes.

So how can we tell when something is seen? If two lines appear on your COVID test, you might say that you can “see” that you have COVID. But that's loose talk. You certainly see the lines, but you merely judge that you have COVID. How can we draw this distinction scientifically?

There are many answers to this question, but one of the most helpful appeals to what is called perceptual adaptation. An example is the way a person's eyes eventually get used to the sun when they go picnicking on a sunny day. When that person later heads indoors, the bathroom appears dimly lit even when all the lights are on. After someone's eyes adapt to bright light, a normally lit room looks dark.

Adaptation is a hallmark of perception. If you can perceive something, you can probably adapt to it—including its brightness, color, orientation, shape and motion. Thus, if numbers are perceived, people should adapt to numbers, too. This is precisely what vision researchers David Burr of the University of Florence and John Ross of the University of Western Australia reported in a paper published in 2008.

Burr and Ross showed that if a person stares at an array of lots of dots, a later array containing a middling number of dots will appear less numerous than it would have otherwise. For instance, they found that after someone stared at 400 dots for 30 seconds, they saw a group of 100 dots as if it had just 30 dots. Therefore, in much the way that our eyes get used to the sun, they can get used to large numbers, leading to striking visual effects.

Other researchers, among them Frank Durgin of Swarthmore College, questioned whether the adaptation was to number as opposed to texture density (how frequently items appear in a given region of space). As a display of dots increases in number while the area it covers stays the same, it also increases in texture density. But a 2020 study by vision scientists Kevin DeSimone, Minjung Kim and Richard Murray teased these possibilities apart and showed that observers adapt to number independently of texture density. Strange as it sounds, humans see numbers.

Natural-Born Enumerators

Despite abundant evidence, contemporary empiricists—folks who follow in the tradition of Locke and Mill and believe that all mathematical knowledge is acquired through experience—remain skeptical about the existence of the number sense. After all, the ability to do arithmetic is traditionally considered a hard-won cultural achievement. Now we're supposed to believe babies do math?

Psychologists do have a checkered history when it comes to overinterpreting the numerical abilities of nonhuman animals. Undergraduate psychology majors are sternly warned of the “Clever Hans effect,” named after a horse that was prematurely credited with sophisticated arithmetic abilities (not to mention the ability to tell time and spell long words in German). It was later revealed that he was simply responding to subtle cues in his trainer's behavior. Researchers nowadays take great care to avoid inadvertently cueing their subjects, but that doesn't resolve everything.

Rafael Núñez of the University of California, San Diego, argues, for instance, that the number sense simply couldn't represent numbers, because numbers are precise: 30 is exactly one more than 29 and exactly one less than 31. The number sense, in contrast, is imprecise: if you see 30 dots flashed on a screen, you'll have a rough idea of how many there are, but you won't know there are exactly 30. Núñez concludes that whatever the number sense is representing, it cannot be number. As he put it in a 2017 article in Trends in Cognitive Sciences, “a basic competence involving, say, the number ‘eight,’ should require that the quantity is treated as being categorically different from ‘seven,’ and not merely treated as often—or highly likely to be—different from it.”

In our 2021 Behavioral and Brain Sciences article, we reply that such concerns are misplaced because any quantity can be represented imprecisely. You can represent someone's height precisely as 1.9 meters, but you can also represent it imprecisely as almost two meters. Similarly, you can represent the number of coins in your pocket precisely as five, but you can also represent it imprecisely as a few. You're representing height and number, respectively. All that changes is how you represent those quantities—precisely or imprecisely. Consequently, it's hard to see why the imprecision of the number sense should be taken to suggest that it's representing some attribute other than number.

This may seem like an issue of semantics, but it has a substantive implication. If we follow Núñez in supposing that the number sense doesn't represent numbers, then we need to say what it represents instead. And no one seems to have any good ideas about that. In many studies of the number sense, other variables—density, area, duration, height, weight, volume, brightness, and so on—have been controlled for.

Another reason to think the number sense concerns numbers (as opposed to height, weight, volume or other quantities) comes from late 19th-century German philosopher and logician Gottlob Frege. In his work on the foundations of arithmetic, Frege noted that numbers are unique in that they presuppose a way of describing the stuff they quantify. Imagine you point to a deck of cards and ask, “How many?” There's no single correct answer. We first need to decide whether we're counting the number of decks (one) or the number of cards (52), even though the 52 cards and the deck are one and the same.

Frege observed that other quantities aren't like this. If we want to know how much the cards weigh, we toss them on a scale and read off our answer. It won't make a difference to their weight if we think of them as a single deck or as a collection of cards. The same goes for their volume. The cards take up the same amount of space whether we describe them as one deck or 52 cards. (Of course, if we remove one card from the deck, it will have a different weight and volume than the full deck does. But then we're changing what we're describing, not just how we're describing it.) If the number sense is sensitive to how stuff is described, we can guess that it truly represents numbers and not other quantities.

This is precisely what we find when we apply Frege's insights. Work by a team of researchers led by Steven Franconeri of Northwestern University gives a vivid illustration. In a 2009 study, they presented subjects with a sequence of two screens containing circles and thin lines. As in many of the experiments described above, subjects were asked which screen had more circles. They were also told to ignore the thin lines entirely. But when lines happened to connect two circles, effectively turning the pair of circles into a single dumbbell-shaped object, the subjects underestimated the number of circles on the screen. It seems they couldn't help but see a dumbbell as one object, even when they were trying to ignore the connecting lines and focus only on the circles.

The observers were not simply tracking some other quantity such as the total surface area of the items or the total number of pixels on the screen. After all, whether two circles and a line are connected to make a dumbbell doesn't affect the total surface area or pixel number. What it would, and seemingly did, affect is the perceived number of items in the display. So just as describing something as a deck of cards versus a collection of individual cards influences how you count it, whether you visually interpret something as a single dumbbell or a pair of circles influences how many items you seem to see—exactly as Frege would have predicted for a visual system that tracks number.
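Frege's contrast between number and other quantities can be made concrete with a small sketch. It is only an illustration of the deck-of-cards example, not a model of any experiment. The per-card weight `CARD_MG` and the helper names `how_many` and `total_weight_mg` are hypothetical.

```python
# Hypothetical weight of one playing card, in milligrams (an assumption).
CARD_MG = 1800

cards = [CARD_MG] * 52

# Two descriptions of the very same stuff.
as_cards = [[c] for c in cards]  # 52 units of one card each
as_deck = [cards]                # 1 unit holding all 52 cards


def how_many(units):
    """Number depends on which units we choose to count."""
    return len(units)


def total_weight_mg(units):
    """Weight ignores the grouping and simply sums the material."""
    return sum(sum(unit) for unit in units)


print(how_many(as_cards), how_many(as_deck))
print(total_weight_mg(as_cards), total_weight_mg(as_deck))
```

The count changes from 52 to one when the description changes, but the total weight stays the same. The dumbbell display has the same structure: joining two circles changes how many units there are to count without changing the total surface area.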

None of this is to deny that the mathematical abilities endowed by the number sense differ dramatically from the mature mathematical abilities most human adults possess. If you ask children for exactly 15 jelly beans, only ones who have learned to count in language can honor your request. But that's no reason to suppose their number sense isn't representing number. Just as children can perceive and discriminate distances long before they can think about them precisely, they have the ability to represent numbers before they learn to count in language and think of numbers precisely.

On their own, these innate mathematical abilities to perceive, add, subtract and operate over numbers are limited. But to understand how an infant develops into an Einstein, we must not underestimate babies' initial grasp of the world. To learn, we need something of substance to build on, and the number sense provides infants with part of the foundation from which new numerical abilities can arise—the ability to track coins and create monied economies, to develop modern mathematics or, more prosaically, to find those missing beers in the back of the fridge.