Psychologists study how humans make decisions by giving people “toy” problems. In one study, for example, my colleagues and I described to subjects a hypothetical disease with two strains. Then we asked, “Which would you rather have? A vaccine that completely protects you against one strain or a vaccine that gives you 50 percent protection against both strains?” Most people chose the first vaccine. We inferred that they were swayed by the phrase about complete protection, even though both shots gave the same overall chance of getting sick.
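The claim that both vaccines carry the same overall risk depends on an assumption the question leaves implicit: a person is equally likely to be exposed to either strain. The minimal sketch below works through the arithmetic under that assumption. The strain labels, the 50/50 exposure split and the rule that an unprotected exposure always causes illness are illustrative choices, not details of the study.

```python
# ASSUMPTIONS (illustrative, not from the study): a person is exposed to exactly
# one of two strains, each with probability 0.5, and an unprotected exposure
# always causes illness.
P_STRAIN = {"A": 0.5, "B": 0.5}

def chance_of_illness(protection: dict[str, float]) -> float:
    """Overall probability of getting sick, given per-strain protection (0 to 1)."""
    return sum(p * (1.0 - protection[strain]) for strain, p in P_STRAIN.items())

full_vs_one = chance_of_illness({"A": 1.0, "B": 0.0})   # complete protection against one strain
half_vs_both = chance_of_illness({"A": 0.5, "B": 0.5})  # 50 percent protection against both

print(full_vs_one, half_vs_both)  # 0.5 0.5
```

If exposure to the two strains were not equally likely, the two vaccines would no longer be equivalent, which is why the assumption matters.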

But we live in a world with real problems, not just toy ones—situations that sometimes require people to make life-and-death decisions in the face of incomplete or uncertain knowledge. Years ago, after I had begun to investigate decision-making with my colleagues Paul Slovic and the late Sarah Lichtenstein, both at the firm Decision Research in Eugene, Ore., we started getting calls about non-toy issues—calls from leaders in industries that produced nuclear power or genetically modified organisms (GMOs). The gist was: “We’ve got a wonderful technology, but people don’t like it. Even worse, they don’t like us. Some even think that we’re evil. You’re psychologists. Do something.”

We did, although it probably wasn’t what these company officials wanted. Instead of trying to change people’s minds, we set about learning how they really thought about these technologies. To that end, we asked them questions designed to reveal how they assessed risks. The answers helped us understand how people form beliefs about divisive issues such as nuclear energy (and, today, climate change) when they do not have all the facts.

Intimations of Mortality

To start off, we wanted to figure out how well members of the public understand the risks they face in everyday life. We asked groups of laypeople to estimate the annual death toll from causes such as drowning, emphysema and homicide and then compared their estimates with scientific ones. Drawing on previous research, we expected that people would make generally accurate estimates but that they would overestimate deaths from causes that get splashy or frequent headlines, such as murders and tornadoes, and underestimate deaths from “quiet killers,” such as stroke and asthma, that make big news less often.

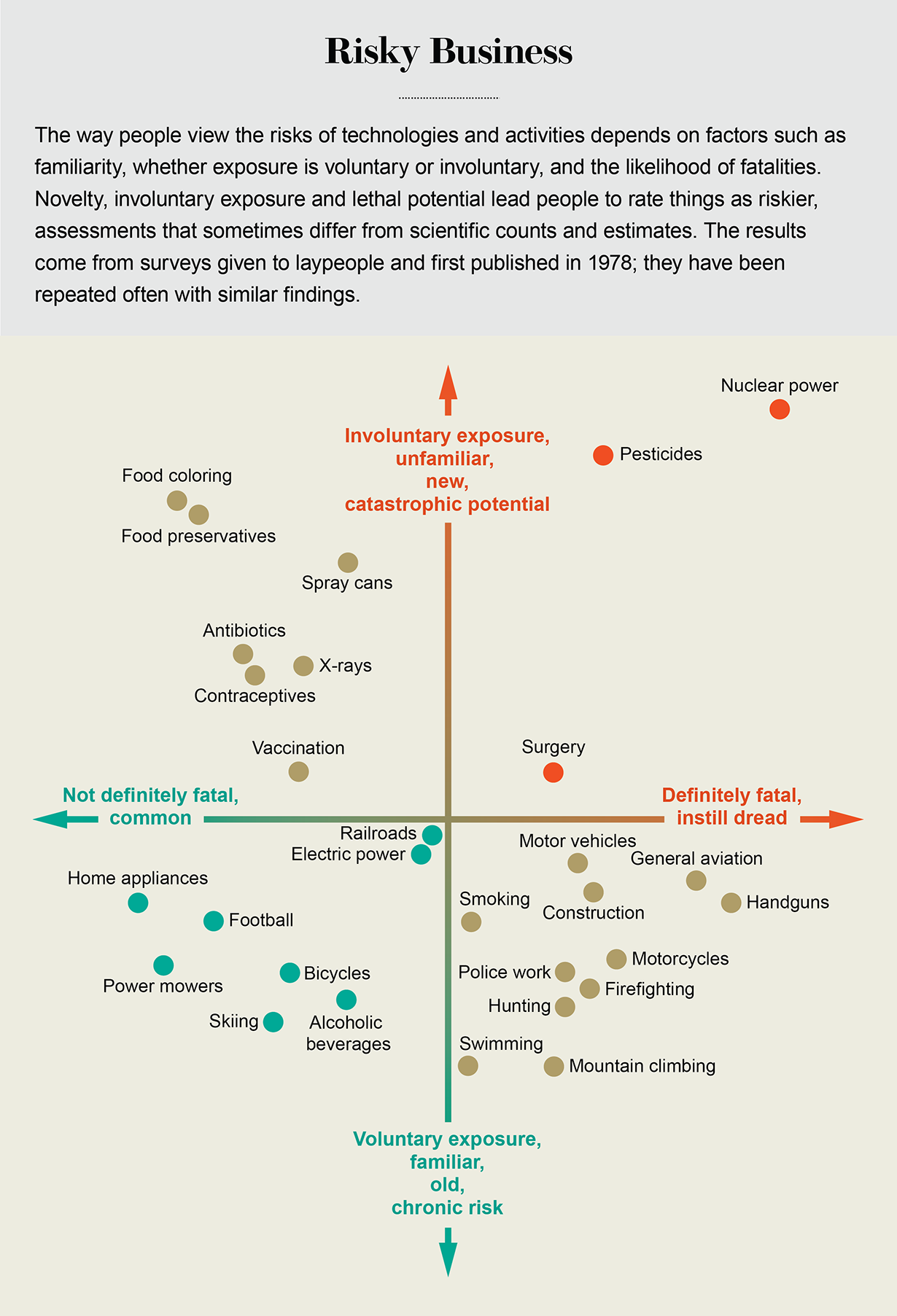

Overall, our predictions fared well. People overestimated highly reported causes of death and underestimated ones that received less attention. Images of terror attacks, for example, might explain why people who watch more television news worry more about terrorism than individuals who rarely watch. But one puzzling result emerged when we probed these beliefs. People who were strongly opposed to nuclear power believed that it had a very low annual death toll. Why, then, would they be against it? The apparent paradox made us wonder if by asking them to predict average annual death tolls, we had defined risk too narrowly. So, in a new set of questions we asked what risk really meant to people. When we did, we found that those opposed to nuclear power thought the technology had a greater potential to cause widespread catastrophes. That pattern held true for other technologies as well.

To find out whether knowing more about a technology changed this pattern, we asked technical experts the same questions. The experts generally agreed with laypeople about nuclear power’s death toll for a typical year: low. But when they defined risk themselves, on a broader time frame, they saw less potential for problems. The general public, unlike the experts, emphasized what could happen in a very bad year. The public and the experts were talking past each other and focusing on different parts of reality.
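The difference between asking about a typical year and asking about a very bad year can be illustrated with a toy comparison. In the sketch below, both technologies and all of their death counts are invented for illustration: the two have the same average annual toll, so a question about average deaths cannot tell them apart, while a question about the worst year can.

```python
# Two HYPOTHETICAL technologies with the same average annual death toll.
# All numbers are invented for illustration; neither represents a real technology.
# Each list holds deaths per year over a 100-year horizon.
steady = [100] * 100                # the same modest toll every year
catastrophic = [0] * 99 + [10_000]  # no deaths for 99 years, then one disaster

def summarize(deaths_per_year: list[int]) -> tuple[float, int]:
    """Return (average deaths per year, deaths in the worst single year)."""
    return sum(deaths_per_year) / len(deaths_per_year), max(deaths_per_year)

print(summarize(steady))        # (100.0, 100)
print(summarize(catastrophic))  # (100.0, 10000)
```

Which summary should count more is a value judgment rather than a calculation, which is one reason two groups looking at the same facts can talk past each other.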

Understanding Risk

Did experts always have an accurate understanding of the probabilities for disaster? Experts analyze risks by breaking complex problems into more knowable parts. With nuclear power, the parts might include the performance of valves, control panels, evacuation schemes and cybersecurity defenses. With GMO crops, the parts might include effects on human health, soil chemistry and insect species.

The quality and accuracy of a risk analysis depend on the strength of the science used to assess each part. Science is fairly strong for nuclear power and GMOs. For new technologies such as self-driving vehicles, it is a different story. The components of risk could be the probability of the vehicle’s laser-light sensors “seeing” a pedestrian, the likelihood of a pedestrian acting predictably, and the chances of a driver taking control at the exact moment when a pedestrian is unseen or unpredictable. The physics of pulsed laser-light sensors is well understood, but how they perform in snow and gloom is not. Research on how pedestrians interact with autonomous vehicles barely exists. And studies of drivers predict that they cannot stay vigilant enough to handle infrequent emergencies.
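Breaking a risk into parts can be sketched as a small probability model. The structure and numbers here are assumptions for illustration, not the author’s analysis: the three components are treated as independent, harm is assumed to occur only when a pedestrian is unseen or unpredictable and the driver then fails to take control, and every probability is a placeholder. Varying the least understood component, driver takeover, shows how weak science on one part leaves the overall estimate uncertain.

```python
# HYPOTHETICAL model of one risk, broken into three component probabilities.
# ASSUMPTIONS (illustration only, not from any real analysis):
#   - the components are independent of one another;
#   - harm occurs only if the pedestrian is unseen OR unpredictable,
#     AND the driver then fails to take control in time.

def p_harm(p_seen: float, p_predictable: float, p_takeover: float) -> float:
    """Chance that an encounter with a pedestrian ends in harm under this toy model."""
    p_system_fails = 1.0 - p_seen * p_predictable  # pedestrian unseen or unpredictable
    return p_system_fails * (1.0 - p_takeover)      # ...and the driver does not step in

# Placeholder values: the sensor and pedestrian terms are held fixed, while the
# least-studied term (driver takeover) is varied across a wide range.
for takeover in (0.1, 0.5, 0.9):
    print(f"takeover={takeover:.1f}  p_harm={p_harm(0.99, 0.95, takeover):.4f}")
```

With these placeholder numbers, the estimated risk changes ninefold as the takeover probability moves from 0.9 to 0.1, so the analysis can be no more certain than its least understood part.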

When scientific understanding is incomplete, risk analysis shifts from reliance on established facts to expert judgment. Studies of those judgments find that they are often quite good—but only when experts get good feedback. For example, meteorologists routinely compare their probability-of-precipitation forecasts with the rain gauge at their station. Given that clear, prompt feedback, when forecasters say that there is a 70 percent chance of rain, it rains about 70 percent of the time. With new technologies such as the self-driving car or gene editing, however, feedback will be a long time coming. Until it does, we will be unsure—and the experts themselves will not know—how accurate their risk estimates really are.
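The check the forecasters perform is a calibration test: group forecasts by the probability stated, then compare each group with how often it actually rained. A minimal sketch, assuming a simple list of daily forecasts paired with rain-gauge outcomes; the function name and the records are hypothetical, not real forecast data.

```python
from collections import defaultdict

def calibration_table(forecasts: list[float], rained: list[bool]) -> dict[float, float]:
    """Group forecasts by stated probability; return the observed rain frequency for each."""
    if len(forecasts) != len(rained):
        raise ValueError("forecasts and outcomes must have the same length")
    counts = defaultdict(lambda: [0, 0])  # stated probability -> [rainy days, total days]
    for p, r in zip(forecasts, rained):
        counts[p][0] += int(r)
        counts[p][1] += 1
    return {p: rainy / total for p, (rainy, total) in sorted(counts.items())}

# Invented records for illustration: ten "70 percent" days and five "20 percent" days.
forecasts = [0.7] * 10 + [0.2] * 5
rained = [True] * 7 + [False] * 3 + [True] + [False] * 4
print(calibration_table(forecasts, rained))  # {0.2: 0.2, 0.7: 0.7}
```

A well-calibrated forecaster’s table has observed frequencies close to the stated probabilities; for a new technology, the outcome column that this check depends on does not yet exist.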

The Science of Climate Science

Expert judgment, which depends on good feedback, comes into play when one is predicting the costs and benefits of attempts to slow climate change or to adapt to it. Climate analyses combine the judgments of experts from many research areas, including obvious ones, such as atmospheric chemistry and oceanography, and less obvious ones, such as botany, archaeology and glaciology. In complex climate analyses, these expert judgments reflect extensive knowledge informed by evidence-based feedback. But some aspects remain uncertain.

My first encounter with these analyses was in 1979, as part of a project planning the next 20 years of climate research. Sponsored by the Department of Energy, the project had five working groups. One dealt with the oceans and polar regions, a second with the managed biosphere, a third with the less managed biosphere, and a fourth with economics and geopolitics. The fifth group, which I joined, dealt with social and institutional responses to the threat.

Even then, more than 40 years ago, the evidence was strong enough to reveal the enormous gamble being taken with our planet. Our overall report, summarizing all five groups, concluded that “the probable outcome is beyond human experience.”

Thinking of the Unthinkable

How, then, can researchers in this area fulfill their duty to inform people about accurate ways to think about events and choices that are beyond their experience? Scientists can, in fact, accomplish this if they follow two basic lessons from studies of decision-making.

Lesson 1: The facts of climate science will not speak for themselves. The science needs to be translated into terms that are relevant to people’s decisions about their lives, their communities and their society. While most scientists are experienced communicators in a classroom, out in the world they may not get feedback on how clear or relevant their messages are.

Addressing this feedback problem is straightforward: test messages before sending them. One can learn a lot simply by asking people to read and paraphrase a message. When communication researchers have asked people to paraphrase weather forecasts, for example, they have found that some are confused by the statement that there is a “70 percent chance of rain.” The problem is with the words, not the number. Does the forecast mean it will rain 70 percent of the time? Over 70 percent of the area? Or that there is a 70 percent chance of at least 0.01 inch of rain at the weather station? The last interpretation is the correct one.

Many studies have found that numbers, such as 70 percent, generally communicate much better than “verbal quantifiers,” such as “likely,” “some” or “often.” One classic case from the 1950s involves a U.S. National Intelligence Estimate that said “an attack on Yugoslavia in 1951 should be considered a serious possibility.” When asked what probability they had in mind, the analysts who signed the document gave a wide range of numbers, from 20 to 80 percent. (The Soviets did not invade.)

Sometimes people want to know more than the probability of rain or war when they make decisions. They want to understand the processes that lead to those probabilities: how things work. Studies have found that some critical aspects of climate change research are not intuitive for many people, such as how scientists can bicker yet still agree about the threat of climate change or how carbon dioxide is different from other pollutants. (It stays in the atmosphere longer.) People may reject the research results unless scientists tell them more about how they were derived.

Lesson 2: People who agree on the facts can still disagree on what to do about them. A solution that seems sound to some can seem too costly or unfair to others.

For example, people who like carbon capture and sequestration because it keeps carbon dioxide out of the air might still oppose using it on coal-fired power plants. They fear an indirect consequence: cleaner coal may make mountaintop-removal mining more acceptable. Those who know that cap-and-trade schemes are meant to create incentives for reducing emissions might still believe that the schemes will benefit banks more than the environment.

These examples show why two-way communication is so important in these situations. We need to learn what is on others’ minds and make them feel like partners in decision-making. Sometimes that communication will reveal misunderstandings that research can reduce. Or it may reveal solutions that satisfy more people. One example is British Columbia’s revenue-neutral carbon tax, whose revenues are used to lower other taxes; it has also received broad enough political support to weather several changes of government since 2008. Sometimes, of course, better two-way communication will reveal fundamental disagreements, and in those cases action becomes a matter for the courts, streets and ballot boxes.

More Than Science

These lessons about how facts are communicated and interpreted are important because climate-related decisions are not always based on what research says or shows. For some individuals, scientific evidence or economic impacts are less important than what certain decisions reveal about their beliefs. These people ask how their choice will affect the way others think about them, as well as how they think about themselves.

For instance, some people forgo energy conservation measures not because they are against conservation but because they do not want to be perceived as eco-freaks. Others conserve more as a symbolic gesture than out of a belief that it makes a real difference. Using surveys, researchers at Yale Climate Connections identified what they call Six Americas in terms of attitudes toward climate change, ranging from alarmed to dismissive. People at those two extremes are the most likely to adopt measures to conserve energy. The alarmed group’s motives are what you might expect. Those in the dismissive group, though, may see no threat from climate change but have noticed that they can save money by reducing their energy consumption.

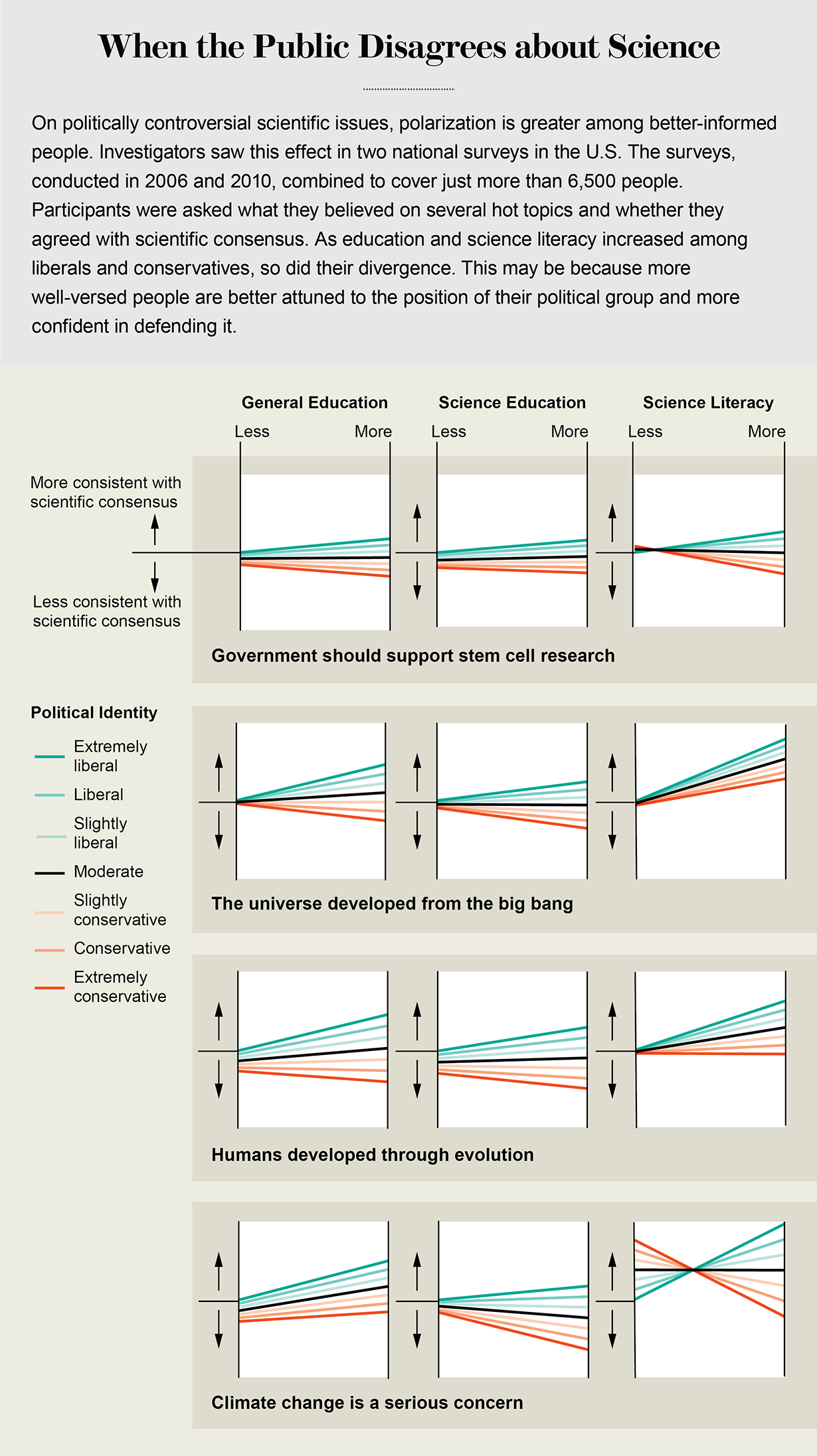

Knowing the science does not necessarily mean agreeing with the science. The Yale study is one of several that found greater polarization among different political groups as people in the groups gained knowledge of some science-related issues. In our research, Caitlin Drummond, currently a postdoctoral fellow at the University of Michigan’s Erb Institute, and I have uncovered a few hints that might account for this phenomenon. One possible explanation is that more knowledgeable people are more likely to know the position of their affiliated political group on an issue and align themselves with it. A second possibility is that they feel more confident about arguing the issues. A third, related explanation is that they are more likely to see, and seize, the chance to express themselves than those who do not know as much.

When Decisions Matter Most

Although decision science researchers still have much to learn, their overall message about ways to deal with uncertain, high-stakes situations is optimistic. When scientists communicate poorly, it often indicates that they have fallen prey to a natural human tendency to exaggerate how well others understand them. When laypeople make mistakes, it often reflects their reliance on mental models that have served them well in other situations but that are not accurate in current circumstances. When people disagree about what decisions to make, it is often because they have different goals rather than different facts.

In each case, the research points to ways to help people better understand one another and themselves. Communication studies can help scientists create clearer messages. And decision science can help members of the public refine the mental models they use to interpret new phenomena. By reducing miscommunication and focusing on legitimate disagreements, decision researchers can help society have fewer conflicts and make the ones that remain easier for all of us to deal with.